Netpeak Spider: An advanced website analysis tool

Today, most businesses have an online presence. A website helps you reach more of your target audience, which has a positive effect on revenue: people are used to searching the Internet for the goods and services they need. At the same time, it is important to understand that the effectiveness of your site depends directly on how professionally it is built. Although artificial intelligence is developing rapidly and reaching into every major area of life, building, optimizing and promoting websites is still done by people. So far, AI cannot fully replace them.

Building and promoting a site is complex, large-scale work that requires in-depth analysis and a detailed study of every aspect. It takes a lot of time and effort, and mistakes made along the way will surface later and require corrections. All of this can be minimized by adding the Netpeak Spider crawler to your workflow. It is a reliable, modern tool that helps you carry out on-page optimization, collect semantic data and organize the results. Let's take a closer look at how this application works.

Netpeak Spider features

Netpeak Spider is a desktop application that crawls websites and analyzes the key on-page optimization parameters to identify errors and inaccuracies that hinder a site's growth. With this tool, you can run a comprehensive SEO audit of a site and check the results after making changes. You can also use it to collect data from competitors' websites. Errors in meta tags, broken links, duplicate pages and other issues that hurt a site's indexing are no longer a problem: Netpeak Spider quickly identifies them, and by fixing them you can improve your site's position in search results.

First, you need to install the program on your computer. If you just want to get to know it, you can start with the free version, which you can use for 2 weeks. That is more than enough time to see how useful the program will be for your work. After that, you will need to buy the paid version to keep using it without restrictions.

Now let's take a closer look at the program itself: its interface, functionality, basic settings and additional tools.

Netpeak Spider interface

The best way to get to know Netpeak Spider is by example. Take a small site and scan it: just enter the site's URL in the address field and click the "Start" button (a black circle with a white triangle in the middle). There is no need to change the settings for your first scan, so we recommend leaving all parameters at their defaults.
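To illustrate what a crawler does after you click "Start", here is a minimal breadth-first crawl sketch in Python. It is not Netpeak Spider's implementation: the `SITE` dictionary is a hypothetical stand-in for downloaded pages and their links, so the example runs without network access. Starting from one URL, it follows only links on the same host, which mirrors the default behavior of not crawling external links.

```python
from collections import deque
from urllib.parse import urljoin, urlparse

# Hypothetical in-memory site: each URL maps to the raw href values found on it.
# A real crawler would download each page and extract these links from its HTML.
SITE = {
    "https://example.com/": ["/about", "/blog/", "https://other.org/"],
    "https://example.com/about": ["/", "/contact"],
    "https://example.com/blog/": ["/blog/post-1", "/about"],
    "https://example.com/blog/post-1": ["/blog/"],
    "https://example.com/contact": [],
}


def crawl(start_url: str, pages: dict) -> list:
    """Breadth-first crawl that follows only links on the start URL's host."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    crawled = []
    while queue:
        url = queue.popleft()
        crawled.append(url)
        for href in pages.get(url, []):
            absolute = urljoin(url, href)  # resolve relative links
            # Skip external hosts and URLs that are already queued or crawled.
            if urlparse(absolute).netloc != host or absolute in seen:
                continue
            seen.add(absolute)
            queue.append(absolute)
    return crawled


print(crawl("https://example.com/", SITE))
```

Because the sketch never leaves the start host, every crawled URL is also an internal URL, so the two counts match.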

Now let's look at each of these interface elements:

- Dashboard.

- Segments.

- Reports.

Dashboard

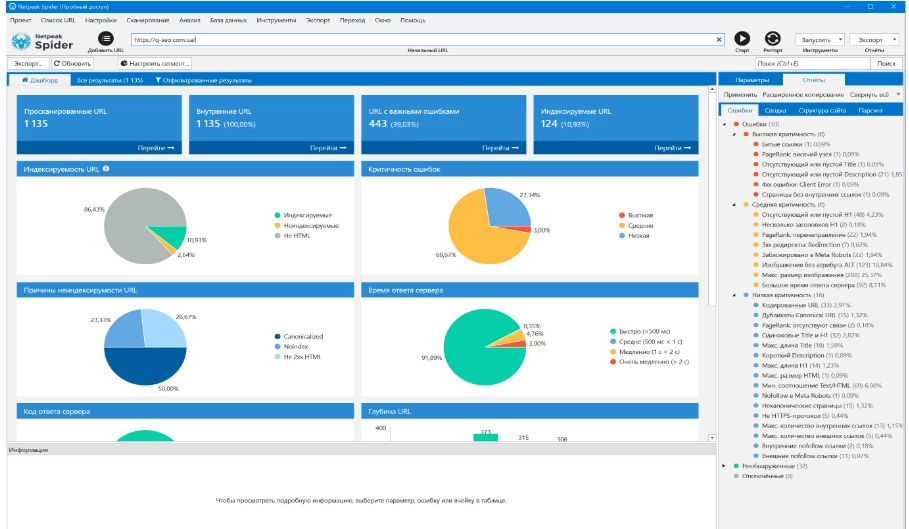

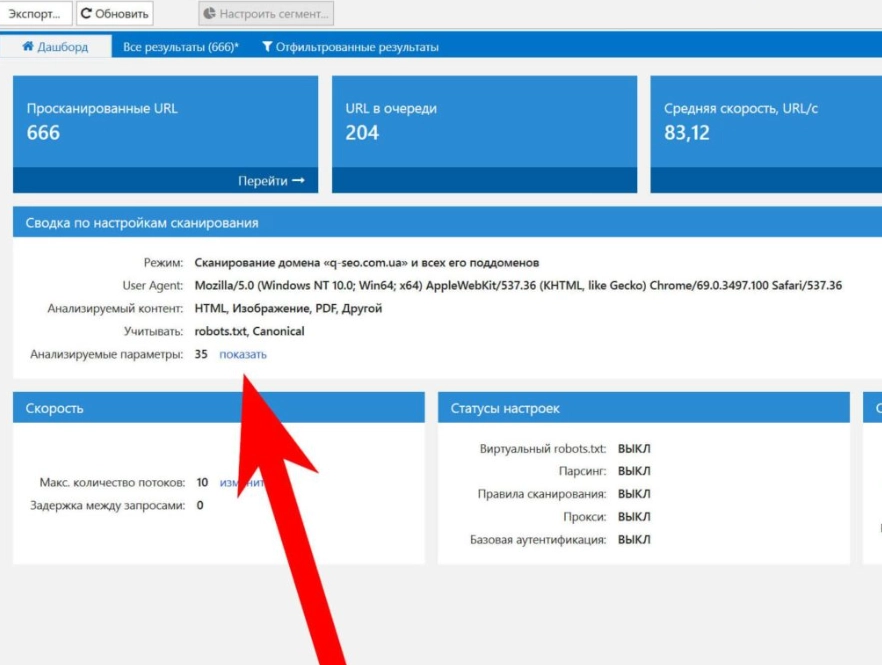

The dashboard is located on the left side of the main window and brings together the key parameters. When you click any of them, reports with the detected errors open in the right part of the window. The main parameters shown on the dashboard are:

- Crawled URLs. These are the addresses of all the pages on your site that the crawler managed to reach. Clicking this link opens the full list of analyzed URLs along with all the data collected from them. URLs with serious errors are highlighted in red, URLs with medium-severity issues in yellow, and URLs with only minor, recommended fixes in blue. To get more detailed information about a page, just click it: the "Information" block shows all the relevant data.

- Internal URLs. Note that the default settings do not include crawling external links, so the number of crawled URLs should match the number of internal URLs.

- URLs with key errors. This filter shows only the pages where the crawler found serious problems.

- Indexable URLs. This block lists all pages that the search engine bot selected for the scan is able to index, that is, pages that meet all indexing requirements. You can choose the bot in the User Agent settings.
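What makes a URL "indexable" can be sketched as a simple check. The criteria below (HTTP 200, no `noindex` in the meta robots tag or the `X-Robots-Tag` header, and no canonical pointing to another URL) are common SEO conventions used here as an assumption. Netpeak Spider's exact rule set may include more, for example robots.txt blocking. The `Page` class and its fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Page:
    """Hypothetical record of what a crawler learned about one URL."""
    url: str
    status: int
    meta_robots: str = ""            # content of <meta name="robots">, if any
    x_robots_tag: str = ""           # value of the X-Robots-Tag header, if any
    canonical: Optional[str] = None  # href of <link rel="canonical">, if any


def is_indexable(page: Page) -> bool:
    """Approximate indexability using common SEO criteria (an assumption)."""
    if page.status != 200:
        return False
    directives = f"{page.meta_robots},{page.x_robots_tag}".lower()
    if "noindex" in directives:
        return False
    # A canonical pointing to another URL marks this one as a non-preferred copy.
    if page.canonical and page.canonical != page.url:
        return False
    return True


pages = [
    Page("https://example.com/", 200),
    Page("https://example.com/old", 301),
    Page("https://example.com/tag/x", 200, meta_robots="noindex, follow"),
    Page("https://example.com/a?sort=asc", 200, canonical="https://example.com/a"),
]
print([p.url for p in pages if is_indexable(p)])
```

Only the home page passes: the redirect, the `noindex` page and the non-canonical sorting URL are all excluded.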

You can also view the analyzed parameters while the scan is still running: just click "Show" next to the "Analyzed parameters" line.

Once the scan is complete, all the information is structured and presented as charts. This lets you see at a glance the ratio of indexable to non-indexable URLs, the distribution of server response times, response codes, content types and many other parameters. Note that all chart elements are clickable, so you can click any of them to drill down into the data.

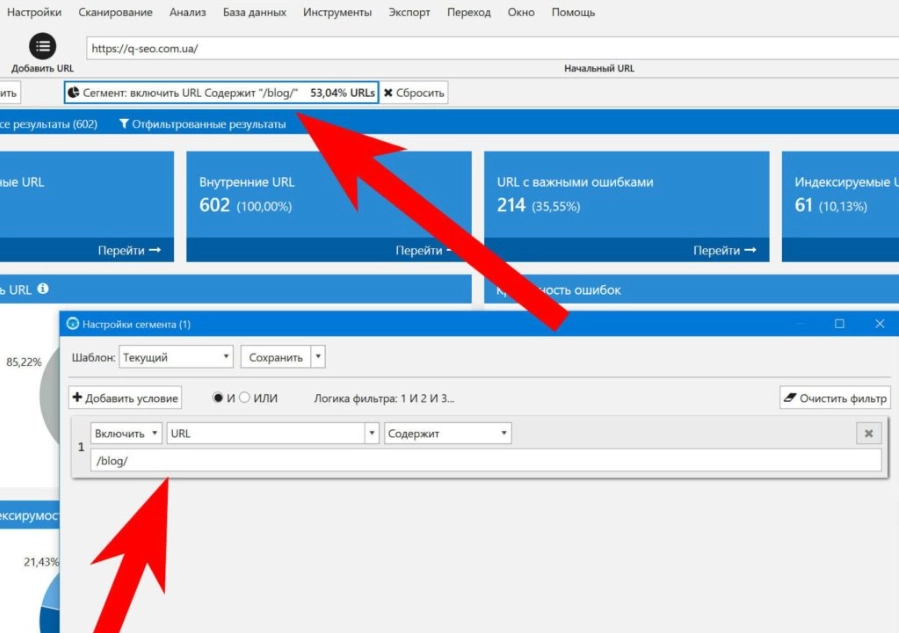

Segments

Segments are just as easy to use. If you have ever set up segments in Google Analytics, you will feel at home here: you set the conditions, and the program selects the matching data for further analysis. This is very convenient when analyzing the errors found on a site. You choose which segments to study in detail, and you can create new ones for the tasks you currently face. For example, on an information portal you might create a separate segment for the blog, and on an online store, separate segments for individual product categories.
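The idea of a segment can be sketched as a filter applied before any counting. In this sketch a segment is assumed to be simply "URL path starts with a given prefix"; Netpeak Spider's segments support richer conditions, and the `ISSUES` data is hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical crawl results: (URL, issue found on that URL).
ISSUES = [
    ("https://shop.example/catalog/phones/x1", "Missing description"),
    ("https://shop.example/catalog/phones/x2", "Duplicate title"),
    ("https://shop.example/catalog/laptops/l5", "Missing description"),
    ("https://shop.example/blog/how-to-choose", "Broken link"),
]


def in_segment(url: str, path_prefix: str) -> bool:
    """In this sketch a segment is simply 'URL path starts with path_prefix'."""
    return urlparse(url).path.startswith(path_prefix)


def issues_in_segment(issues, path_prefix: str) -> Counter:
    """Count issue types only for URLs that belong to the segment."""
    return Counter(issue for url, issue in issues if in_segment(url, path_prefix))


print(issues_in_segment(ISSUES, "/catalog/"))
```

Switching the prefix to `/blog/` or `/catalog/phones/` narrows the same data to a different part of the site without re-crawling.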

Reports

All the information the program collects while crawling your site is grouped in the right part of the main window, across several tabs:

- Errors. These use the same color coding described above: critical errors (red) come first, followed by medium-severity issues (yellow) and, at the very bottom, low-severity issues (blue). This makes the list easy to read: you immediately see which pages need serious work and which can wait. To see the details for any item in the list, just click it.

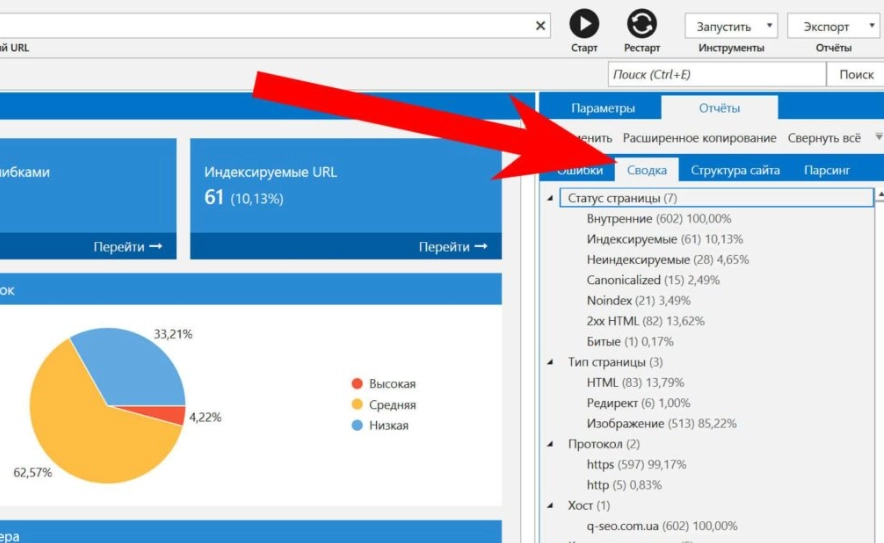
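The red, yellow, blue ordering amounts to sorting issues by a severity rank. Below is a minimal Python sketch, assuming three severity levels mapped to integers; the issue names are hypothetical, not Netpeak Spider's actual issue list.

```python
from enum import IntEnum


class Severity(IntEnum):
    """Lower value sorts first: red, then yellow, then blue."""
    HIGH = 0    # red
    MEDIUM = 1  # yellow
    LOW = 2     # blue


# Hypothetical issue list as it might come out of a crawl.
ISSUES = [
    ("Missing alt text", Severity.LOW),
    ("Broken link", Severity.HIGH),
    ("Title too long", Severity.MEDIUM),
    ("Duplicate pages", Severity.HIGH),
]


def sort_by_severity(issues):
    """sorted() is stable, so issues of equal severity keep their order."""
    return sorted(issues, key=lambda item: item[1])


for name, severity in sort_by_severity(ISSUES):
    print(f"{severity.name:<6} {name}")
```

Using an `IntEnum` keeps the ordering in one place, so adding a level later only means adding one member.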

- Summary. This tab gives quick access to data on pages of a particular type and status. Similar parameters also appear on the dashboard, but here they are better structured and easier to read and work with.

- Site structure. This tab groups pages by the site's URL structure (useful when the URLs reflect the site's hierarchy). It is also helpful when analyzing your direct competitors: for example, you can find out which products they sell in a particular category and use that information to optimize your own product catalog.

- Parsing. This tab shows the data collected from competitor sites, including queries relevant to specific pages of your site.
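Grouping pages by URL structure, as the Site structure tab does, can be approximated by bucketing URLs under their parent directory. This is an illustrative sketch that assumes the URL path mirrors the catalog hierarchy; the competitor URLs are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlparse


def group_by_directory(urls):
    """Group URLs by their parent directory, e.g. /catalog/phones/x1 -> /catalog/phones/."""
    groups = defaultdict(list)
    for url in urls:
        path = urlparse(url).path or "/"
        directory = path.rsplit("/", 1)[0] + "/"
        groups[directory].append(url)
    return dict(groups)


# Hypothetical competitor URLs.
urls = [
    "https://competitor.example/catalog/phones/x1",
    "https://competitor.example/catalog/phones/x2",
    "https://competitor.example/catalog/laptops/l5",
    "https://competitor.example/",
]
for directory, members in sorted(group_by_directory(urls).items()):
    print(directory, len(members))
```

Counting the members of each directory gives a rough picture of how many products a competitor lists per category.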

All reports in the "Reports" block can be exported as .xls or .csv files, and the "Advanced copy" option copies them straight to the clipboard. If you plan to keep analyzing the site later, save the entire project so you won't have to re-crawl it.
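For readers who post-process exported data, here is a small sketch of what a tabular .csv report looks like when produced with Python's standard `csv` module. The column names and rows are hypothetical and do not reproduce Netpeak Spider's export schema; the configurable delimiter reflects that spreadsheet software in some locales expects `;` instead of `,`.

```python
import csv
import io

# Hypothetical report rows.
ROWS = [
    {"url": "https://example.com/", "status": 200, "title": "Home"},
    {"url": "https://example.com/old", "status": 404, "title": ""},
]


def export_csv(rows, fieldnames, delimiter=","):
    """Serialize report rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, delimiter=delimiter)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(export_csv(ROWS, ["url", "status", "title"]))
```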

Netpeak Spider settings

When we looked at the Netpeak Spider interface above, we kept the default settings. In practice, you can adjust them to fit the task at hand. Keep in mind that this program is designed for professional use, so it offers a wide range of settings for every crawling parameter.

To make Netpeak Spider's settings easier to navigate, let's go over the main options. All of them are listed in the vertical menu on the left side of the window:

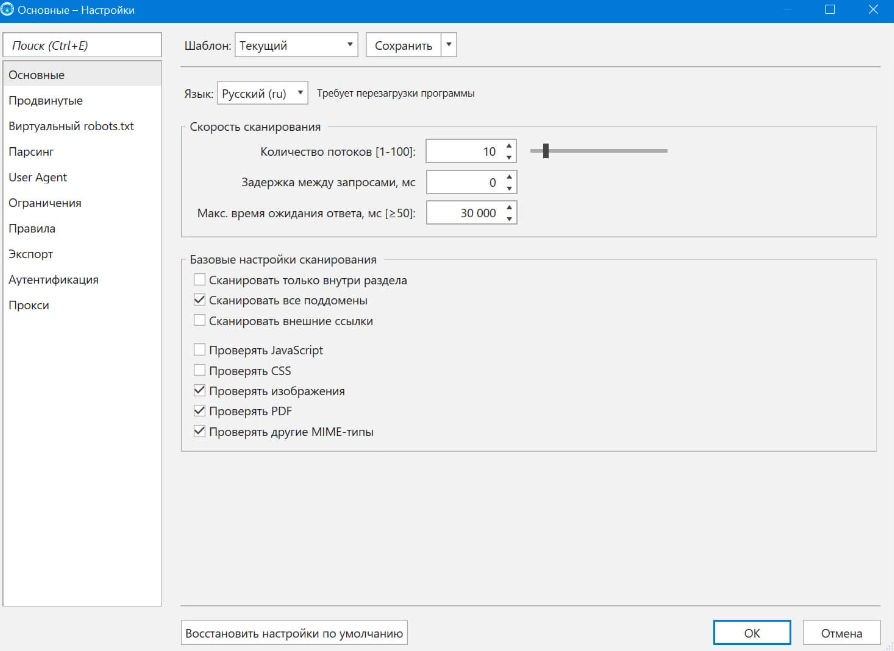

- Main. This block contains settings such as the program language, the number of crawling threads that run simultaneously, and the maximum time to wait for a server response. In the "Basic Scan Settings" block, you check the functions you need for the current task. For example, if you select "Crawl all subdomains", the program also crawls the pages on all of your site's subdomains. The "Scan only within a section" option restricts crawling to pages inside the section you have selected. Another option is "Scanning external links". You can also choose which types of content the program processes. Select as many options as your task requires.

- Advanced. As the name suggests, these settings require a deeper understanding of how search bots work and how they follow directives. Here you can choose to follow or ignore a number of rules by checking the options you need in the "Consider indexing instructions", "Crawl links from the link tag" and "Automatically pause crawling" blocks. You can also allow the program to use cookies and to crawl the content of pages that return 4xx errors.

- Virtual robots.txt. Here you can use your own virtual robots.txt instead of the physical file hosted on the server. This is useful if you cannot access the site's files for some reason, and also when you want to test changes to robots.txt before the actual file is updated.

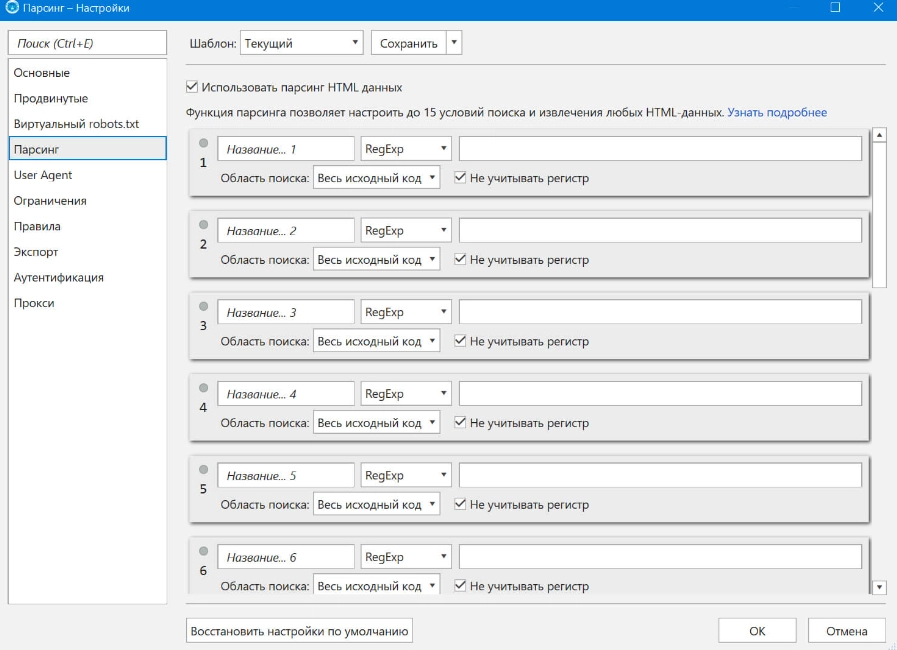
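Testing a virtual robots.txt boils down to parsing rules from text you supply rather than from the server. Python's standard `urllib.robotparser` can do this, which makes for a quick way to sanity-check a draft file; this is an independent illustration, not how Netpeak Spider evaluates rules internally, and the rules below are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# A "virtual" robots.txt held in memory instead of fetched from the server.
# The rules below are hypothetical examples.
VIRTUAL_ROBOTS = """\
User-agent: *
Disallow: /admin/
Disallow: /cart

User-agent: Googlebot
Disallow: /staging/
"""


def load_virtual_robots(text: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(text.splitlines())  # parse() takes an iterable of lines
    return parser


rules = load_virtual_robots(VIRTUAL_ROBOTS)
for agent, url in [
    ("*", "https://example.com/admin/users"),
    ("*", "https://example.com/products"),
    ("Googlebot", "https://example.com/staging/new-home"),
    ("Googlebot", "https://example.com/admin/users"),
]:
    print(agent, url, rules.can_fetch(agent, url))
```

Note the last case: Googlebot matches its own group, so the `*` rules (including `Disallow: /admin/`) do not apply to it. This kind of surprise is exactly what testing a draft robots.txt is meant to catch.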

- Parsing. This is a very handy tool for collecting data from large sites with a deep, multi-level structure. It lets you quickly find pages that lack hreflang attributes, Google Analytics code and so on. It also lets you collect data from competitors' sites, such as information about their products and prices. You'll get the most out of this feature if you learn to use CSS selectors and regular expressions.

- User Agent. This setting lets you see the site the way a particular search bot sees it, so you understand which pages will be crawled and which will remain invisible to crawlers. You can also select the Googlebot smartphone bot to check how well the site meets the requirements for mobile sites.

- Restrictions. Here you can set limits on how the crawler works and define the thresholds for certain parameters beyond which the program reports an issue.

- Rules. Here you can set up rules that direct the crawler only to the pages you need, so you get a focused picture of what interests you with minimal wasted time. Rules can be combined with the logical operators AND or OR. With AND, all conditions must be met at the same time, which lets you build complex rules. With OR, it is enough for at least one of the conditions to be met.

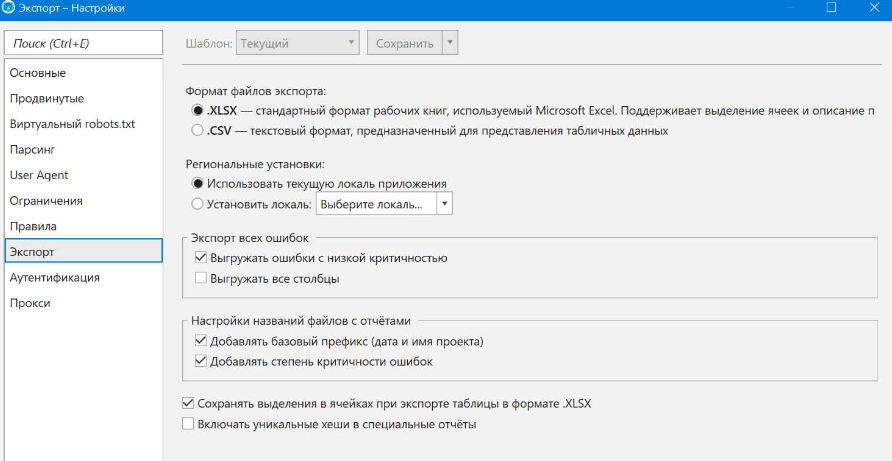
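The difference between AND and OR can be shown with a tiny rule matcher. This sketch is an illustration of the logic only; the condition functions `in_blog` and `has_no_query` are hypothetical and are not Netpeak Spider's rule syntax.

```python
from typing import Callable, List

Condition = Callable[[str], bool]


def matches(url: str, conditions: List[Condition], operator: str = "AND") -> bool:
    """AND: every condition must hold. OR: at least one condition must hold."""
    if operator == "AND":
        return all(condition(url) for condition in conditions)
    if operator == "OR":
        return any(condition(url) for condition in conditions)
    raise ValueError(f"unknown operator: {operator!r}")


# Hypothetical conditions a rule might combine.
def in_blog(url: str) -> bool:
    return "/blog/" in url


def has_no_query(url: str) -> bool:
    return "?" not in url


conditions = [in_blog, has_no_query]
for url in ["https://example.com/blog/post",
            "https://example.com/blog/post?page=2",
            "https://example.com/about?ref=nav"]:
    print(url, matches(url, conditions, "AND"), matches(url, conditions, "OR"))
```

The paginated blog URL passes the OR rule (it is in the blog) but fails the AND rule (it has a query string), which is why AND is the tool for narrow, precise selections.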

- Export. Here you specify how you want to receive reports: as an Excel spreadsheet or as a plain tabular text file. You can also configure regional settings, how all errors are exported, report file names and so on.

- Authentication. If the site you are working with is not accessible by default to the bot you selected, you need to configure authentication. This is especially relevant when checking a site that has not yet launched and is still being tested.

- Proxy. Many foreign hosting providers today block IP addresses geolocated in Russia, which means you cannot parse such sites from there. To get around these restrictions, you can connect mobile proxies in this block. Such an intermediary server replaces your real IP address with one from the location you need, giving you access to resources that would otherwise be blocked. Working through mobile proxies, in particular from the MobileProxy.Space service, also provides privacy and security online, the ability to work in multi-threaded mode and other advantages. You can find more details about the features of mobile proxies at https://mobileproxy.space/user.html?buyproxy.
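Outside Netpeak Spider, the same idea of routing requests through a proxy while presenting a chosen User-Agent can be sketched with Python's standard `urllib.request`. The proxy address, credentials and User-Agent string below are placeholders, not values from any real provider; the network call is left commented out so the sketch runs offline.

```python
import urllib.request

# Placeholders: substitute the host, port and credentials from your proxy provider.
PROXY_URL = "http://user:password@proxy.example.net:8080"
# Hypothetical User-Agent string; crawlers usually identify themselves here.
USER_AGENT = "Mozilla/5.0 (compatible; ExampleAuditBot/1.0)"


def make_proxy_opener(proxy_url: str, user_agent: str) -> urllib.request.OpenerDirector:
    """Build an opener that routes HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    opener = urllib.request.build_opener(handler)
    opener.addheaders = [("User-Agent", user_agent)]  # replaces the default Python UA
    return opener


def fetch(url: str, opener: urllib.request.OpenerDirector, timeout: float = 10.0) -> bytes:
    """Download url through the opener (needs network access and a working proxy)."""
    with opener.open(url, timeout=timeout) as response:
        return response.read()


opener = make_proxy_opener(PROXY_URL, USER_AGENT)
# html = fetch("https://example.com/", opener)  # uncomment with a real proxy
```

Credentials embedded in the proxy URL are sent by `ProxyHandler` as basic proxy authentication; providers that use IP allowlisting need only the host and port.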

Netpeak Spider additional tools

As mentioned, Netpeak Spider's capabilities are quite broad. In addition to the settings described above, you can use the following additional tools:

- Parse the source code and HTTP headers. The program saves the source code of any page on your site, extracts its text content and records the HTTP headers from the server response. This way, you can always go back a step if new settings don't work out.

- Calculate internal PageRank. This tool shows how much weight each page of your site receives through internal links. You can use it to optimize the site's internal structure and adjust internal linking so that search bots pay more attention to the most important pages.

- Check whether the sitemap is correct. This is done with the XML Sitemap Validator: just enter the URL of your sitemap, and the program checks whether it is filled in correctly and whether it contains duplicates.

- Create a sitemap. If you don't have a sitemap yet, you can generate one automatically with the XML Sitemap Generator. Don't forget to set the parameters that matter most to you.
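The idea behind internal PageRank can be sketched with the classic iterative PageRank formula: each page keeps a base share of (1 - d) / N and receives d times the rank of every page linking to it, divided by that page's number of outgoing links. The damping factor d = 0.85 and the even redistribution of rank from pages without outgoing links are textbook choices assumed here; Netpeak Spider's own calculation may differ. The `site_links` graph is hypothetical.

```python
def internal_pagerank(links, damping=0.85, iterations=50):
    """Iterative PageRank over an internal link graph.

    links maps each page to the pages it links to. Pages with no outgoing
    links spread their rank evenly across all pages so the total stays at 1.
    """
    pages = set(links) | {target for targets in links.values() for target in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                for other in pages:
                    new_rank[other] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical site: the home page links to two sections, which link back home.
site_links = {"/": ["/catalog/", "/blog/"], "/catalog/": ["/"], "/blog/": ["/"]}
for page, score in sorted(internal_pagerank(site_links).items(), key=lambda kv: -kv[1]):
    print(f"{page:<10} {score:.3f}")
```

The home page ends up with the most weight because both sections link back to it; adding a link from the home page to a deep product page is the kind of change whose effect this calculation makes visible.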

Summing up

As you can see, Netpeak Spider is a powerful yet simple and easy-to-use tool. If necessary, you can pause a scan at any time and resume it later by clicking the "Start" button. You can also re-crawl individual URLs or lists of URLs, for example after fixing an error, to check whether the fix worked, so you don't have to re-crawl the entire site. This is especially useful for pages highlighted in red or yellow in the results. You can also adjust the crawling parameters on the fly, removing options you don't need and adding ones that are useful at the moment.

For all its usefulness, however, Netpeak Spider's work can be significantly limited by restrictions imposed in a particular country by law or by internet providers. To avoid this, consider connecting mobile proxies, in particular from the MobileProxy.Space service: https://mobileproxy.space/user.html?buyproxy. It offers stability, rich functionality, reliability, favorable rates and 24/7 technical support. With such an assistant, your work as an SEO specialist will be as thorough and fast as possible, helping you move your site toward the top of the search results.