Concurrency and parallelism in data collection: features and differences

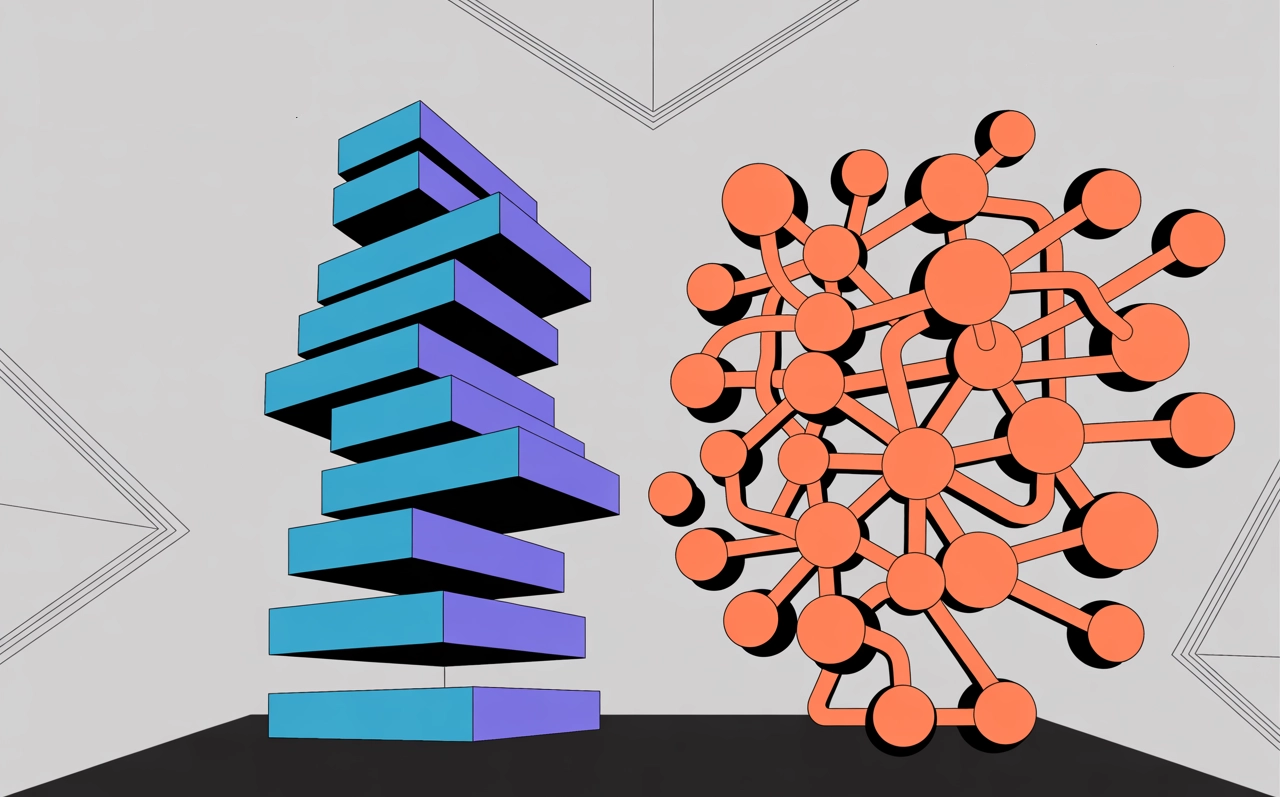

If you work in IT, you have probably come across the terms concurrency and parallelism, and many people still treat them as synonyms. They are not, although their goals are similar. Most modern software systems, whatever their niche and operating conditions, must withstand heavy loads while staying efficient and reliable. This applies to databases, servers, and tools for data parsing, that is, the automated collection of information.

In this review, we look at how concurrency and parallelism are used in web scraping. Here they help distribute computing resources sensibly and optimize performance, which improves the efficiency of the automated system as a whole. Specialists often use the two terms interchangeably, which is wrong: concurrency and parallelism take different approaches to handling multiple tasks, even though developers use both to build responsive and scalable data collection solutions.

Below we explain what each technique is and when to use it, walk through illustrative examples that show how they differ, and describe how to optimize workflows with them. We also highlight the main differences and show how the two methods can be combined for the best results.

The essence and features of concurrency

Let's start with concurrency. It is a way of organizing task execution in which several processes or threads share computing resources but are not executed at the same instant. In practice, the processor switches between tasks very quickly, which creates the effect of simultaneous work even though, on a single core, no two tasks actually run at the same moment.

In other words, the first thing to understand about concurrency is that only one task is being processed at any given moment. The tasks effectively compete with each other for processor time. This makes the technique a good fit for I/O-bound work, also called input/output operations: any task in which the program sends data, singly or in batches, or waits to receive it. Data parsing, also known as web scraping or automated data collection, is the most obvious example of such a task.

Applied to scraping, concurrency means the scraper does not wait for one request to be fully processed before issuing the next. Instead, it sends many requests at once and handles each response as it arrives, with the tasks competing for processor time. The result is a noticeable speedup in data collection.

Concurrency was originally designed to make systems more responsive, which is achieved by switching between tasks almost instantly. Because this works so efficiently, many people assume that the server or processor handles the requests in parallel. This is an illusion: the tasks are not executed at the same moment, the switching is simply fast enough to create that effect.

So when should concurrency be used in automated data collection? Three key areas can be distinguished:

- Handling a large number of network connections. This situation arises when a server must respond to requests coming from thousands of users at once.

- Managing processes within an operating system. The computer can run several programs at once, but each receives processor time in turn: while one task is running, another waits.

- Running tasks in the background. IT specialists often use this approach to speed up work: for example, messages can be processed or data can be loaded in the background. Meanwhile, the main logic of the program keeps running, because the background work does not block it.

These scenarios are commonly implemented with multithreading, one of the main technologies underlying concurrency. Let's look at how this works in practice, starting with threads themselves.

What are threads in concurrency?

A thread is a separate sequence of instructions executed within a single process. In concurrent systems, one process can contain several threads, which take turns using processor time and so compete for computing resources. To see how this works in practice, consider the following example:

- The program makes a network request and waits for a response from the server.

- Rather than sitting idle, the processor switches to another thread, for example one that processes data that has already been received.

- As soon as the server responds to the network request, the first thread resumes execution.

What do we get as a result? The system runs stably, the processor does not sit idle while waiting, and its computing resources are shared efficiently among the working threads. This is the essence of concurrency.
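The three steps above can be sketched with Python's standard `threading` module. This is a minimal illustration rather than a real scraper: `time.sleep` stands in for waiting on a server, and the names `fetch` and `run_two_requests` are hypothetical.

```python
import threading
import time


def fetch(name, delay, log):
    """Pretend to wait for a server response (time.sleep stands in for network I/O)."""
    log.append(f"{name}: request sent")
    time.sleep(delay)  # this thread is blocked here, so the other thread can run
    log.append(f"{name}: response received")


def run_two_requests():
    log = []  # list.append is atomic in CPython, so no lock is needed for this demo
    threads = [
        threading.Thread(target=fetch, args=("thread-1", 0.2, log)),
        threading.Thread(target=fetch, args=("thread-2", 0.1, log)),
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until both threads have finished
    return log, time.perf_counter() - start


if __name__ == "__main__":
    log, elapsed = run_two_requests()
    print("\n".join(log))
    print(f"elapsed: {elapsed:.2f} s")
```

Because the second thread runs while the first one is still waiting, the total time should be close to the longer of the two delays rather than their sum.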

Threads are therefore one of the key mechanisms for implementing this technique in automated data collection systems. A thread is the smallest unit of execution within a process, and most workloads consist of several tasks, each of which can run in a separate thread.

Processor time is divided among all the threads that belong to a task, and they are then executed in turn. Concurrency is thus about making the most efficient use of processor resources. Many programming languages and operating systems provide tools for managing threads, including tools for creating, synchronizing, and pausing them. Threads are especially useful in automated information collection, where many sources must be processed at once while keeping the delay of each operation to a minimum, thereby increasing throughput.

A practical example of using concurrency in data parsing

In practical terms, concurrency is an integral part of modern software systems, including those used for web scraping. It makes it possible to run a large number of processes efficiently even with seriously limited resources. A clear example is the handling of multiple requests arriving at a web server.

Consider a couple of examples. Suppose you run an online clothing store. It is common for different users to place orders, check delivery status, or simply browse products at the same time. Even the most powerful server cannot process all of these requests truly simultaneously, because the number of processor cores is limited and clearly smaller than the number of incoming requests.

This is where concurrency comes into play. Execution time is shared between tasks, and the server switches between one user's request and another's as quickly as possible. While one customer is entering contact details in the order form, the processor sends another customer the product information they requested. The two processes alternate, without one having to finish completely before the other starts. The result is a fast response from the system, minimal delays, and the illusion of parallel execution discussed above.

For data parsing, a different example is more relevant. Suppose you want to study your competitors and collect information from their official websites, 50 sites in total. Without concurrency, the system downloads data from one site, and only after that finishes does it move on to the next, until all 50 sites have been processed. This takes considerable time.

The process is easy to optimize with concurrency. For example, 10 requests are sent at the start, and while some pages are still loading, data that has already arrived is being processed. This significantly increases the speed of data collection and reduces the total time needed to complete the task.
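The 50-site scenario can be sketched with a thread pool limited to 10 requests in flight. The code below is an assumption-laden illustration: `download` only sleeps instead of making a real HTTP request, and the `competitor-N.example` URLs and the function names are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def download(url):
    """Stand-in for a real HTTP request: sleep instead of waiting on the network."""
    time.sleep(0.05)
    return f"<html>content of {url}</html>"


def collect(urls, max_in_flight=10):
    # At most `max_in_flight` downloads are waiting on the "network" at any moment.
    with ThreadPoolExecutor(max_workers=max_in_flight) as pool:
        # map() returns results in the same order as the input URLs.
        return list(pool.map(download, urls))


if __name__ == "__main__":
    urls = [f"https://competitor-{i}.example/" for i in range(50)]
    start = time.perf_counter()
    pages = collect(urls)
    print(len(pages), "pages in", round(time.perf_counter() - start, 2), "s")
```

With these simulated delays, the sequential version would wait 50 times in a row, while the pooled version waits in roughly 5 batches of 10.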

Methods for optimizing automated data collection using concurrency

Applied correctly, concurrency improves the performance of an automated data collection system, largely by making better use of the time spent on each task. The gain comes from a combination of the following:

- Minimizing downtime. While waiting for a response from the server, the processor does not sit idle. It switches to other pending operations instead of waiting for the current one to finish.

- Effective scaling. Concurrent systems have repeatedly been shown to handle hundreds or even thousands of simultaneous tasks while maintaining high speed.

- Significant reduction in system load. There is no need to allocate separate resources to each task: all connections are handled by the same computing capacity.

Methods for implementing concurrency

Several methods can be used to implement concurrency, and they are often combined for the best overall efficiency. In particular:

- Asynchronous programming. Tasks are organized so that they never block the main thread.

- Load balancing. Thread processing is distributed evenly across the available processor capacity, which prevents some components from sitting idle while others are overloaded.

- Flexible thread management. Organizing threads properly keeps the number of context switches between tasks to a minimum.

In web scraping systems, asynchronous HTTP requests allow information from different pages to be loaded concurrently, without creating a separate thread for each task. This noticeably reduces the load on the processor and speeds up the work. If you have already applied concurrency to automated data collection, you have probably noticed how quickly such tasks are completed.
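As a rough sketch of the asynchronous approach, the example below uses Python's standard `asyncio` module. It makes no real network calls: `asyncio.sleep` stands in for a non-blocking HTTP request, and the names `fetch` and `scrape`, the URLs, and the `limit` parameter are hypothetical. A real scraper would replace `fetch` with a call to an asynchronous HTTP client.

```python
import asyncio


async def fetch(url, delay=0.05):
    """Hypothetical fetch: asyncio.sleep stands in for a non-blocking HTTP call."""
    await asyncio.sleep(delay)  # control returns to the event loop while "waiting"
    return url, f"payload from {url}"


async def scrape(urls, limit=10):
    semaphore = asyncio.Semaphore(limit)  # cap the number of requests in flight

    async def bounded_fetch(url):
        async with semaphore:
            return await fetch(url)

    # All coroutines share one thread; gather() returns results in input order.
    return await asyncio.gather(*(bounded_fetch(u) for u in urls))


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(20)]
    results = asyncio.run(scrape(urls))
    print(len(results), "pages fetched")
```

Here no thread is created per request: a single event loop switches between coroutines whenever one of them is waiting.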

Now let's move on to the second technique: parallelism.

The essence and features of parallelism

Parallelism is the simultaneous execution of several computations on different hardware resources. This is the fundamental difference from what we discussed above: concurrency alternates tasks and imitates simultaneous execution, whereas parallelism is genuinely simultaneous execution. It requires multi-core or multiprocessor hardware, and without it the technique cannot be implemented. With parallelism, individual tasks are processed at the same time on different processor cores or even on different servers.

Parallelism has its own features and areas of application. In particular, it helps with the following tasks relevant to data parsing:

- Significant acceleration of resource-intensive operations, such as machine learning, graphics rendering, and the collection and analysis of large amounts of data.

- Processing a large number of data streams in near real time. This speed matters most in video processing and in the analysis of financial information and documentation.

- More efficient use of modern multi-core and multiprocessor systems. The incoming load is distributed as evenly as possible across the cores, which helps keep operation stable.

If you work with modern multi-core processors, you can readily take advantage of parallelism. Programs written for it break incoming tasks into independent parts and run them simultaneously. This is the key feature of parallelism and its fundamental difference from concurrency.

The principle of accelerating processes using parallelism

One of the most significant advantages of parallelism is a noticeable reduction in total processing time, achieved by dividing the work correctly into parallel streams. The speedup in data collection comes from the following technical solutions:

- Dividing large tasks into separate components. Instead of performing a large job sequentially, step by step, the incoming data is divided into independent segments of roughly equal size, and all of them are processed in parallel.

- Even distribution of the load across processor cores or servers. This prevents some nodes from being overloaded while others sit idle, so the system runs stably, without freezes or failures.

- Automatic merging of results. Once every segment has been processed, the system combines the separate pieces into a single final result.
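The split, distribute, and merge steps above can be sketched with Python's standard `ProcessPoolExecutor`, which runs work in separate processes and can therefore use several cores. The word-counting task and all function names here are hypothetical, chosen only to give the workers something CPU-bound to do.

```python
from collections import Counter
from concurrent.futures import ProcessPoolExecutor


def split_into_chunks(items, parts):
    """Split `items` into `parts` slices of nearly equal size."""
    size, extra = divmod(len(items), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def count_words(pages):
    """CPU-bound step applied to one chunk: count words across its pages."""
    counts = Counter()
    for text in pages:
        counts.update(text.lower().split())
    return counts


def merge(partials):
    """Combine the per-chunk results into a single Counter."""
    total = Counter()
    for part in partials:
        total.update(part)
    return total


def count_words_parallel(pages, workers=4):
    chunks = split_into_chunks(pages, workers)       # 1. divide the task
    with ProcessPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(count_words, chunks)     # 2. one chunk per worker process
        return merge(partials)                       # 3. merge the results
```

When calling `count_words_parallel` from a script, it is safest to do so under an `if __name__ == "__main__":` guard, because on some platforms worker processes re-import the main module.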

To see how parallelism works in practice, consider a simple example. Suppose you need to collect information from 1000 pages, and your hardware can process 1 page per second. Sequentially, this takes 1000 seconds. If you split the task into 10 parallel threads, the whole job takes 1000/10 = 100 s. If you also use a distributed system of 10 separate computers, each running 10 threads, the time drops further: 1000/10/10 = 10 s. All the information from 1000 pages is then available in just 10 seconds, assuming the work divides evenly and overhead is negligible. The time advantage of parallelism is impressive.
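The arithmetic in this example can be written as a small helper. It is an ideal-case estimate only: the function name and parameters are hypothetical, and it assumes the work splits perfectly evenly with no coordination overhead.

```python
def estimated_seconds(pages, seconds_per_page=1.0, workers=1, machines=1):
    """Ideal-case estimate: assumes perfectly even splitting and zero overhead."""
    return pages * seconds_per_page / (workers * machines)


print(estimated_seconds(1000))                            # 1000.0
print(estimated_seconds(1000, workers=10))                # 100.0
print(estimated_seconds(1000, workers=10, machines=10))   # 10.0
```

Real systems usually fall short of these figures, since splitting the work, transferring data, and merging results all take time.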

Areas where parallelism is most useful

Like concurrency, parallelism has areas where it delivers the best results. In particular, it should be used in the following situations:

- for computationally intensive work, such as image and video processing;

- when using cloud services, for example when distributed servers issue queries to databases;

- in systems operating under heavy load, for example those that process transactions in multithreaded mode.

Note, however, that some tasks cannot be solved with this technique at all. Not every task can be divided into roughly equal parts and processed in parallel, and some cannot be divided at all, namely those where one part depends directly on the result of another. Forcing parallelism onto such tasks complicates processing, adds overhead, and increases the risk of inaccurate or even incorrect results.

A brief comparison of concurrency and parallelism

Now that we have covered concurrency and parallelism in detail, it is important to understand when to use each, what the key difference between them is, and how each affects overall system performance.

At this stage, remember two key points about these methods:

- Concurrency relies on fast, efficient switching between tasks. This creates the effect of almost simultaneous execution, but work is not split into parts that are then processed in parallel. While one task is waiting, another is processed.

- Parallelism assumes multi-core processors or several machines from the start. One large task is divided into several parts that are processed at the same time. This technique cannot be implemented without sufficient hardware resources.

To make the difference between the two techniques clearer, here is a brief comparison by several criteria:

- Task execution. With concurrency, tasks are interleaved: the processor switches between them, so one task progresses while another waits for a response. With parallelism, tasks or their parts are executed at the same time on different cores or machines.

- Resource management. Concurrency can be implemented on a device with a single processor, while parallelism requires more capable hardware, such as several processors or one multi-core processor.

- Performance. Concurrency improves performance by making the system more responsive. Parallelism noticeably speeds up the execution of a single task.

- Type of task. Concurrency suits tasks that involve input/output operations: while one is waiting for a response, another is processed. Parallelism suits intensive calculations and should be used where huge amounts of data must be processed.

We hope it is now clear how different the two methods are and that each has its own area of use. If your computing resources are limited, look to concurrency, since it makes the most efficient use of them. If your hardware is capable and the speed of solving tasks is a priority, focus on parallelism.

Combined solutions

The choice is not black and white: the two methods can also be combined. Practice shows that in some areas a combination of concurrency and parallelism gives much better results than either one alone, letting you significantly increase system performance and adapt it to expected conditions. This is especially relevant for complex, multi-level problems and for applications that require high responsiveness, where a combined solution helps optimize computing resources and speeds up information processing.

Such hybrid solutions are particularly valuable when processing large amounts of data. Parallelism distributes the task across several processors, and on each of them concurrency manages the individual operations locally. Combining both concepts in a workflow offers the following advantages:

- Increased data processing speed. Parallel execution of individual parts of tasks, combined with switching between the tasks themselves, significantly speeds up the response.

- Maximum use of your hardware's computing resources. Each processor or individual core works at full capacity.

- Reliable handling of complex scenarios. Combining both methods yields a solution that manages even complex, multitasking processes effectively and flexibly.

By combining concurrency and parallelism, you can build scalable, highly efficient systems. This holds even when you need to process huge amounts of data and solve resource-intensive tasks.

What approach should be used in practice for data parsing?

Concurrency and parallelism are used today in many areas of information technology, but this review focuses on automated data collection. Here the choice of technique depends directly on the task at hand. For example, the concurrent approach is optimal when speed is not critical and your hardware is fairly modest. If you need to process a large number of pages as intensively as possible and have multi-core processors available, choose parallelism.

Still, the optimal solution is often a hybrid approach: concurrency is used to send asynchronous requests, while parallelism is used for the subsequent processing of the responses. This lets you both fetch all the pages and process them as quickly as possible.
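One possible shape for such a hybrid pipeline, using only Python's standard library, is sketched below. It is an illustration under stated assumptions, not a complete scraper: `asyncio.sleep` replaces the real HTTP request, `parse` is a trivial placeholder for heavy processing, and all function names and URLs are hypothetical.

```python
import asyncio
from concurrent.futures import ProcessPoolExecutor


async def fetch(url):
    """Hypothetical download: asyncio.sleep stands in for a non-blocking HTTP request."""
    await asyncio.sleep(0.05)
    return f"<title>{url}</title>"


def parse(html):
    """Placeholder for CPU-heavy processing of one downloaded page."""
    return html.replace("<title>", "").replace("</title>", "").upper()


async def fetch_and_parse(url, executor):
    html = await fetch(url)  # concurrency: wait for the response without blocking
    loop = asyncio.get_running_loop()
    # Parallelism: hand the CPU-bound step to the executor's workers.
    return await loop.run_in_executor(executor, parse, html)


async def scrape(urls, executor=None):
    # With executor=None, asyncio falls back to its default thread pool.
    return await asyncio.gather(*(fetch_and_parse(u, executor) for u in urls))


def main():
    urls = [f"https://example.com/page/{i}" for i in range(20)]
    with ProcessPoolExecutor() as pool:  # worker processes can run on separate cores
        print(asyncio.run(scrape(urls, pool)))
```

To try it, call `main()` under an `if __name__ == "__main__":` guard. Passing a process pool gives true parallel parsing, while omitting the executor keeps everything in threads, which is enough when the processing step is light.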

Summing Up

In this review, we tried to cover two key methods used in data processing, concurrency and parallelism, as comprehensively as possible. We examined both in detail, compared them, and considered their capabilities, areas of application, and practical conditions for use. We also discussed the hybrid approach, which combines both methods to optimize resource use and maximize system performance through even load distribution.

Which option should you use in practice? It depends on the specifics of the task: concurrency is well suited to asynchronous, I/O-bound operations, while parallelism is better suited to complex calculations. Take these nuances into account to optimize your data collection work and get the desired results as quickly as possible.

Keep in mind, however, that anti-fraud systems can block your data parsing. Search engines react very negatively to automated solutions and apply quite severe sanctions to them. In such conditions, mobile proxies from the MobileProxy.Space service can help. They replace the real IP address and geolocation of your device and work simultaneously over the HTTP(S) and Socks5 protocols, providing a high level of confidentiality and security online and helping to bypass various blocks and restrictions.

You can try the product free of charge for 2 hours to see how convenient and technologically advanced it is. More detailed information about these mobile proxies is available at the link. The service promises high stability, convenience, functionality, reasonable rates, fast renewal, and a variety of payment methods. If you have additional questions or need expert advice, the technical support service is available around the clock.