Digital Marketing Predictions for 2026: AI Agents, Privacy, First‑Party Data & Mobile Proxies

Contents

- Introduction: Why 2026 will be a breaking point for digital marketing

- Foundations: core concepts for a confident start

- Deep dive: where the market is heading by 2026

- Practice 1: First-party data 2.0 — from consent to profit

- Practice 2: Media and measurement in a post-cookie world — Privacy Sandbox, MMM, and incrementality

- Practice 3: AI agents in marketing — from pilot to operating system

- Practice 4: Ethical data collection and testing with mobile proxies

- Practice 5: Server-side analytics and conversions — the bedrock of accurate decisions

- Practice 6: Creative engineering with AI — turning ideas into revenue

- Practice 7: An experimentation culture — deciding under uncertainty

- Common mistakes: what not to do in 2026

- Tools and resources: what to use and where to build skills

- Case studies and results: winning in the new reality

- FAQ: 10 deep questions about 2026

- Conclusion: your 2026 action plan

Introduction: Why 2026 will be a breaking point for digital marketing

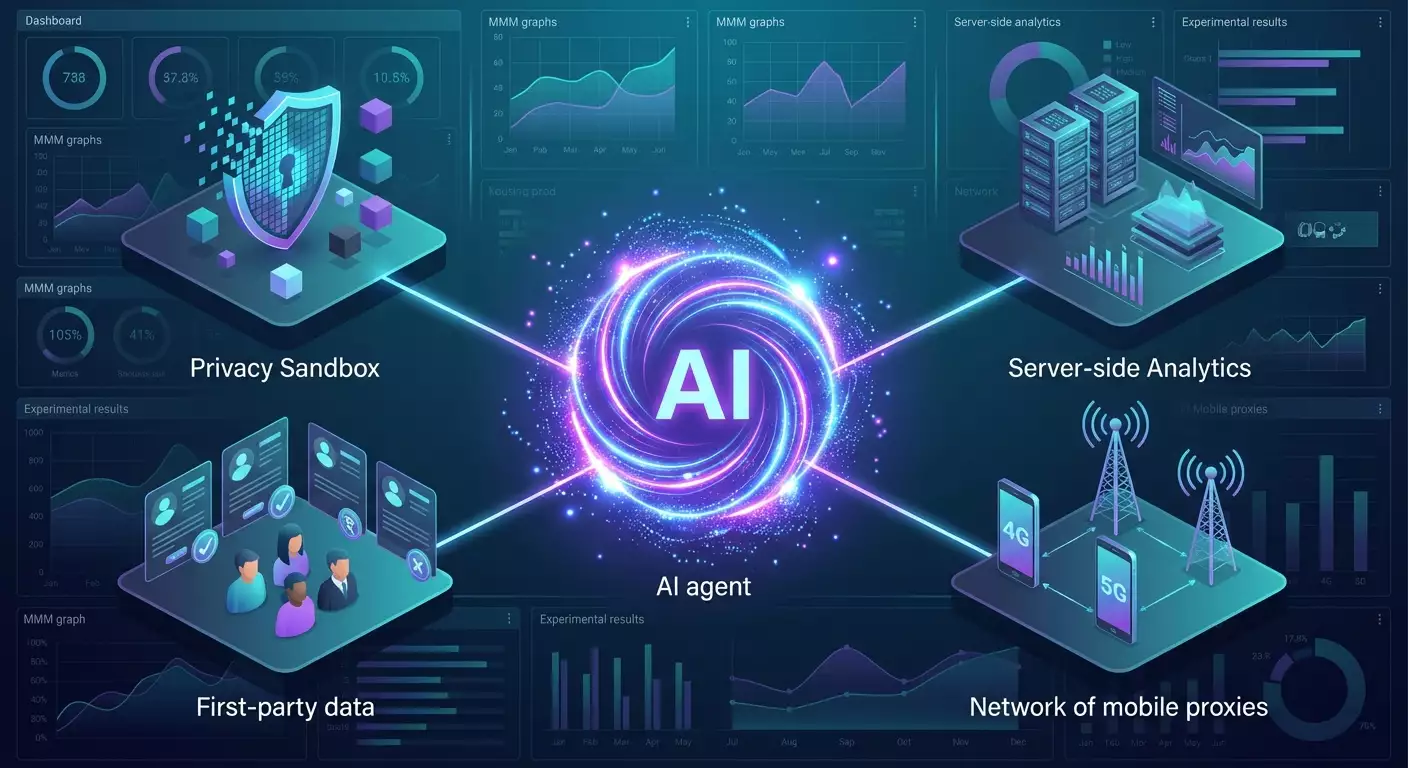

2026 will go down as the year three forces rewrote digital marketing’s rules: the mass adoption of AI agents in day-to-day operations, the market’s practical shift to a post-cookie reality with Privacy Sandbox and Consent Mode, and the new wave of first-party/zero-party data as the foundation for growth. And yes, there’s a quieter but critical layer: mobile proxies and lawful market data collection that power competitive intelligence, testing, and ad verification.

What should you expect? Budget reallocation toward retail media, contextual signals, and experimentation; a gradual displacement of “manual” buying by autonomous agents; analytics rebuilt around incrementality, MMM, and server-side attribution; and tough standards for privacy and ethics. This guide is about practice, not slogans: how to refit your stack, deploy AI agents, legally collect and validate data in the new environment, and profit from it all in 2026.

You’ll get strategic direction, technical how-tos, checklists, frameworks, a tool stack, and real, number-backed case studies. Ready?

Foundations: core concepts for a confident start

First-party vs. third-party data

First-party data is information you gather directly from users on your properties: site/app events, email, phone, survey responses, purchase history. Zero-party data is information users knowingly volunteer (e.g., preferences via a survey or customization). Third-party data is bought or aggregated from external providers, including via browser cookies. The world is moving to a first-party/zero-party data 2.0 model with explicit consent and clear value for users.

Cookie deprecation and Privacy Sandbox

Third-party cookies are losing ground: Safari and Firefox already restrict them by default, and Chrome has been piloting Privacy Sandbox APIs as replacements, including the Topics API (on-device interest topics), the Protected Audience API (retargeting auctions without exposing identifiers), and the Attribution Reporting API (privacy-preserving conversion measurement). Together with Consent Mode v2 and server-side event delivery, these mechanisms set the new norms for 2025–2026.

Attribution and measurement

There is no silver bullet. Attribution is becoming hybrid: incrementality tests (geo/holdout), MMM (Marketing Mix Modeling), server-side event attribution via API (CAPI, Enhanced Conversions), and clean room capabilities. Instead of "perfectly tracking" individuals, the goal is to understand causal impact and manage for profit.

AI agents vs. assistants

An AI assistant supports an operator. An AI agent acts autonomously within guardrails: it forms hypotheses, runs tests, refreshes creatives, adjusts bids, writes reports, and invokes tools via APIs. Keys to success: guardrails, human-in-the-loop, and metric controls.

Mobile proxies

Mobile proxies provide internet access through real 4G/5G mobile IPs. Use cases: ad verification, competitive intelligence, price intelligence, localization QA, anti-fraud. Crucial: data collection must comply with laws, platform terms, and ethical principles.

Deep dive: where the market is heading by 2026

Five structural shifts

- AI-native marketing ops: 30–50% of media buying and creative tasks are automated by agents. Companies shift from “manual control” to “control via KPIs and experiments.”

- Post-cookie stack: contextual targeting, Privacy Sandbox, retail media, server-side attribution, Consent Mode v2, consent-probability models, and synthetic control groups.

- First-party data as an asset: CDP, identity resolution, value exchange. Without this, acquisition costs rise and LTV/CAC falls.

- Retail media and walled gardens: a bigger share of the mix, broader audiences and signals. Brands with robust SKU analytics and supply-chain data have the edge.

- Lawful market data collection: mobile proxies + headless automation + anti-detect = clean panels for decisions instead of “shadowy scraping.”

Numbers and benchmarks

- By most industry estimates, digital already accounts for more than 70% of global ad spend; through 2026, growth is expected to come from retail media, CTV, and search with generative answers.

- Industry estimates suggest that 60–80% of companies adopted server-side event delivery and Consent Mode v2 during 2024–2025, while MMM and incrementality testing are growing at double-digit rates.

- Verticals most exposed to cookie deprecation: e-commerce, travel, auto, and finance—where clean rooms and server-side attribution are scaling fastest.

Bottom line: winners combine rigorous data governance, operationalized AI, and an experimentation discipline.

Practice 1: First-party data 2.0 — from consent to profit

Goal

Build a reliable system to collect, enrich, and activate user data with explicit consent, lowering CAC, lifting LTV, and improving model accuracy despite the loss of third-party cookies.

The VALUE framework

- V — Value exchange: what users get for their data (personalization, perks, priority support, premium features).

- A — Ask smart: ask selectively (zero-party micro-surveys after key events, contextual preferences, progressive profiles).

- L — Legal & consent: transparent policies, Consent Mode v2, purpose categories (storage, personalization, measurement, ads).

- U — Unified identity: email/phone as keys, hashing, ID graph, server-side deduplication.

- E — Enablement: CDP, server-side tagging, audience APIs, channel integrations.

Step-by-step

- Audit data and consents: inventory collection points (web, app, offline), event map, consent status. Find duplicates, unused events, and orphaned pixels.

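- Move to Consent Mode v2: pass consent signals (including the v2 parameters ad_user_data and ad_personalization) to your tags so that, without consent, tags either stay blocked (basic mode) or send only cookieless pings used for modeling (advanced mode); with consent, full data flows. Verify compliance with your privacy policy.

- Server-side tracking: deploy a server container (e.g., GTM Server-Side or similar) and a first-party collection endpoint that receives events from web, app, and CRM sources.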

- Unified ID: implement hashed email/phone (SHA-256), device binding, and deduplication at the user_id level.

- CDP: choose a CDP (or DIY on your DWH) for profiles, segmentation, activation, and user rights handling (deletion/export).

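- Zero-party flows: trigger micro-surveys after 2–3 key events, and offer a preference center and game-like mechanics that give users clear value.

- Activation: export segments to ad platforms via audience APIs (e.g., Google Customer Match, Meta Custom Audiences), send conversions via CAPI/EC, and reuse the same segments in email, push, and web personalization (feature flags).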

- Security: encrypt in transit and at rest, enforce access controls, log activity, and run regular audits.
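The Unified ID step above can be sketched in Python. This is a minimal illustration, assuming raw records arrive as dicts with hypothetical `user_id`, `email`, and `phone` fields and that phone numbers already include a country code. It normalizes each key before SHA-256 hashing (so `Ana@Example.com` and `ana@example.com` hash identically) and collapses records by `user_id`. Each ad platform documents its own normalization rules (for example, whether a phone number keeps its leading `+`), so check them before uploading.

```python
import hashlib
import re


def normalize_email(email: str) -> str:
    # Trim and lowercase so the same address always yields the same hash.
    return email.strip().lower()


def normalize_phone(phone: str) -> str:
    # Assumes the number already includes its country code.
    # Keeps digits only and prefixes "+" (E.164 style).
    return "+" + re.sub(r"\D", "", phone)


def sha256_hex(value: str) -> str:
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


def build_identity_keys(records: list[dict]) -> dict[str, dict[str, set[str]]]:
    """Deduplicate raw records into one profile per user_id with hashed keys."""
    profiles: dict[str, dict[str, set[str]]] = {}
    for rec in records:
        profile = profiles.setdefault(
            rec["user_id"], {"email_sha256": set(), "phone_sha256": set()}
        )
        if rec.get("email"):
            profile["email_sha256"].add(sha256_hex(normalize_email(rec["email"])))
        if rec.get("phone"):
            profile["phone_sha256"].add(sha256_hex(normalize_phone(rec["phone"])))
    return profiles


records = [
    {"user_id": "u1", "email": " Ana@Example.com "},
    {"user_id": "u1", "email": "ana@example.com", "phone": "+1 (555) 010-0000"},
]
profiles = build_identity_keys(records)
print(len(profiles["u1"]["email_sha256"]))  # 1: both spellings hash the same
```

Hashing happens only after normalization; hashing raw input would turn trivial formatting differences into different identities and lower match rates.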

Quality checklist

- Share of profiles with valid contact >35% for repeat businesses (e-com, subscriptions).

- Consent coverage 75%+ on key pages driven by honest value exchange.

- Server-side share of events ≥80% for marketing analytics.

- Event schema errors under 2% weekly (automated validation).
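The last checklist item assumes automated schema validation. A minimal Python sketch follows, using a hypothetical schema with one business rule (a `value` must come with a `currency`); in production you would more likely express the schema in a dedicated language such as JSON Schema.

```python
# Hypothetical schema: field -> (accepted type(s), required?)
SCHEMA = {
    "event_name": (str, True),
    "event_id": (str, True),
    "timestamp": (int, True),          # epoch seconds
    "value": ((int, float), False),
    "currency": (str, False),
}


def validate_event(event: dict) -> list[str]:
    """Return a list of error codes; an empty list means the event is valid."""
    errors = []
    for field, (types, required) in SCHEMA.items():
        if event.get(field) is None:
            if required:
                errors.append(f"missing:{field}")
            continue
        if not isinstance(event[field], types):
            errors.append(f"type:{field}")
    # Business rule: monetary events must state their currency.
    if event.get("value") is not None and event.get("currency") is None:
        errors.append("rule:value_requires_currency")
    return errors


def weekly_error_rate(events: list[dict]) -> float:
    """Share of events with at least one schema error."""
    if not events:
        return 0.0
    return sum(1 for e in events if validate_event(e)) / len(events)


def passes_quality_bar(events: list[dict], max_error_rate: float = 0.02) -> bool:
    # "Under 2%" from the checklist, applied to one week of events.
    return weekly_error_rate(events) < max_error_rate
```

Returning error codes rather than a single boolean makes it easy to chart which rule breaks most often.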

Example

An online retailer rolled out a “preference center” and server-side events: opt-in rose from 58% to 72%, lookalike accuracy improved, and CAC fell 11% in eight weeks.

Practice 2: Media and measurement in a post-cookie world — Privacy Sandbox, MMM, and incrementality

Goal

Build a resilient buying and measurement system without third-party cookies: a combination of Privacy Sandbox APIs, contextual targeting, retail media, and experimental analytics.

The transition stack

- Contextual targeting 2.0: semantic/page signals, brand suitability, dynamic creative inserts.

- Privacy Sandbox: pilots with Protected Audience API for retargeting and Attribution Reporting API for click/view measurement.

- Retail media: SKU-level campaigns, on-shelf performance, joint promotions, and incremental sales reports.

- Server-side attribution: CAPI, Enhanced Conversions, event de-duplication.

- Experiments: geo-lift, holdout, PSA tests.

- MMM: weekly/daily models, either Bayesian (e.g., LightweightMMM) or regularized regression (e.g., Robyn), accounting for seasonality and noise.
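Most MMM tools transform raw spend before fitting the regression, so the model captures both carry-over and diminishing returns. The sketch below shows two common transforms, geometric adstock and Hill saturation, in plain Python. The decay, half-saturation, and shape values are illustrative assumptions; libraries such as Robyn or LightweightMMM estimate these parameters from data rather than taking them as fixed inputs.

```python
def geometric_adstock(spend: list[float], decay: float) -> list[float]:
    """Carry a share `decay` (0..1) of the previous period's effect forward."""
    out, carry = [], 0.0
    for x in spend:
        carry = x + decay * carry
        out.append(carry)
    return out


def hill_saturation(x: float, half_sat: float, shape: float) -> float:
    """Diminishing returns: 0 at x=0, 0.5 at x=half_sat, approaching 1 as x grows."""
    if x <= 0:
        return 0.0
    return x**shape / (x**shape + half_sat**shape)


def channel_feature(spend: list[float], decay: float = 0.5,
                    half_sat: float = 100.0, shape: float = 2.0) -> list[float]:
    # Adstock first, then saturation: a common ordering in MMM pipelines.
    return [hill_saturation(a, half_sat, shape) for a in geometric_adstock(spend, decay)]


weekly_spend = [100.0, 0.0, 0.0, 200.0]
print(geometric_adstock(weekly_spend, decay=0.5))  # [100.0, 50.0, 25.0, 212.5]
```

The resulting feature (one per channel) then enters the regression alongside baseline and seasonality terms, and the fitted coefficient becomes that channel's contribution.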

90-day plan

- Weeks 1–2: audit pixels/events, launch server-side, enable Consent Mode v2.

- Weeks 3–4: test 2–3 contextual DSPs, set brand suitability, select inventory.

- Weeks 5–6: pilot Protected Audience (retargeting) on 10–15% of traffic; enable Attribution Reporting.

- Weeks 7–8: launch 1–2 retail media networks, calculate SKU-level ROI, publish joint reports.

- Weeks 9–10: run a geo-lift experiment with a control region.

- Weeks 11–12: build a basic MMM on 18–24 months of history; sanity-check against incrementality.
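For the geo-lift in weeks 9–10, the simplest readout compares how the test region moved against a control region over the same period. The Python sketch below uses a ratio-based difference-in-differences estimate. It assumes one test and one control region with comparable pre-period trends and does not produce confidence intervals; dedicated geo-experiment tools typically build synthetic controls from many regions instead.

```python
from statistics import mean


def geo_lift(test_pre: list[float], test_post: list[float],
             ctrl_pre: list[float], ctrl_post: list[float]) -> float:
    """Relative uplift in the test region via ratio-based difference-in-differences.

    Each argument is a list of daily KPI values (e.g., transactions).
    """
    test_growth = mean(test_post) / mean(test_pre)
    ctrl_growth = mean(ctrl_post) / mean(ctrl_pre)  # growth that happened anyway
    return test_growth / ctrl_growth - 1


lift = geo_lift(
    test_pre=[100] * 14, test_post=[110] * 14,
    ctrl_pre=[200] * 14, ctrl_post=[204] * 14,
)
print(f"{lift:.1%}")  # 7.8%
```

Here the test region grew 10% while the control grew 2%, so only about 7.8% is attributed to the campaign. This is the estimate you would later sanity-check the MMM against.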

The 3M measurement framework

- Metrics: set your north star (LTV/CAC, margin), secondary (CPx, reach), and diagnostic (frequency, creative score).

- Methods: attribution (CAPI/EC), incrementality (geo/PSA), MMM (long horizon).

- Merge: synthesize conclusions. If MMM suggests raising a channel's budget by 20% but a geo-lift test measures only +8% uplift, scale cautiously until further tests validate the gap.

Privacy Sandbox campaign checklist

- Confirm that the test audience's browsers and SDKs support the APIs being piloted.

- A separate pilot budget, with KPI uplift measured against a holdout.

- Account for the Attribution Reporting API's privacy limits (aggregated, noised, and delayed reports).

- Log agent/operator hypotheses and outcomes.

Example

A fintech brand ran a geo-lift test on contextual campaigns: transactions rose 6.4%, with CPM 9% lower than interest-based targeting. MMM confirmed a 7–9% sales contribution. Decision: shift an additional 15% of budget into contextual and retail media while continuing Protected Audience tests.

Practice 3: AI agents in marketing — from pilot to operating system

Goal

Cut manual work by 30–50%, accelerate the experimentation cycle, and lift ROI using autonomous agents across ad platforms, creatives, and CRM.

Agent architecture

- Context: access to the brief, brand constraints, audiences, budgets, and historical data.

- Tools: ad platform APIs, BI, A/B platforms, generative models, and a feature store.

- Memory: a vector store for hypotheses/experiment results; a decision log.

- Procedures: Plan, Act, Observe, Learn — the PAOL loop.

- Guardrails: budget caps, blacklists, stop-words, brand-safety rules, and mandatory human review for high-risk actions.
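The Guardrails item is the part of this architecture most worth making explicit in code. Below is a minimal Python sketch of a check that an agent's Act step could pass through before touching an ad account. The action fields, thresholds, and three-way outcome (reject, human review, auto-apply) are hypothetical; real limits would come from your budget policy and brand rules.

```python
from dataclasses import dataclass


@dataclass
class ProposedAction:
    # Hypothetical shape of an action emitted by an agent.
    kind: str            # e.g. "bid_change", "budget_change", "creative_publish"
    campaign_id: str
    budget_delta: float  # change to the daily budget, account currency
    text: str = ""       # creative copy, if the action publishes any


@dataclass
class Guardrails:
    daily_budget_cap: float
    max_auto_step: float                    # largest budget change allowed without review
    stop_words: tuple[str, ...] = ()        # expected in lowercase
    blocked_campaigns: tuple[str, ...] = ()


def review(action: ProposedAction, current_budget: float, rails: Guardrails) -> str:
    """Return "reject", "human_review", or "auto_apply"."""
    if action.campaign_id in rails.blocked_campaigns:
        return "reject"
    if current_budget + action.budget_delta > rails.daily_budget_cap:
        return "reject"
    copy = action.text.lower()
    if any(word in copy for word in rails.stop_words):
        return "reject"
    # High-risk actions go to a human: large budget moves and any new creative.
    if abs(action.budget_delta) > rails.max_auto_step or action.kind == "creative_publish":
        return "human_review"
    return "auto_apply"


rails = Guardrails(daily_budget_cap=1000, max_auto_step=100,
                   stop_words=("guaranteed",), blocked_campaigns=("brand-core",))
print(review(ProposedAction("bid_change", "c1", 50), 500, rails))  # auto_apply
```

Hard violations (caps, blocklists, stop-words) are checked before the human-review rule, so a risky action is never merely queued when it should be refused outright.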

Pilot: 4 scenarios in 6 weeks

- Creative agent: generates 5–10 variants, tests headlines/visuals, aggregates CTR, CVR, and scroll-depth metrics.

- Bidding agent: tunes bids/frequency within pROAS/CPA limits, adjusts by time/geo.

- CRM agent: personalizes email/push using zero-party preferences, optimizes frequency by LTV.

- Analyst agent: produces daily digests, detects anomalies, recommends experiments.
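The analyst agent's anomaly detection can start very simply. This Python sketch flags days whose value sits more than a chosen number of standard deviations from the trailing window's mean (a rolling z-score). The 14-day window and threshold of 3 are assumptions to tune, and the method ignores weekly seasonality, which a production detector should model.

```python
from statistics import mean, stdev


def flag_anomalies(values: list[float], window: int = 14,
                   threshold: float = 3.0) -> list[int]:
    """Indices of days that deviate strongly from the preceding `window` days."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sd = mean(history), stdev(history)
        if sd == 0:
            # Flat history: any change at all is unusual.
            if values[i] != mu:
                flagged.append(i)
            continue
        if abs(values[i] - mu) / sd > threshold:
            flagged.append(i)
    return flagged


daily_cpa = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 99, 102, 98, 100, 250, 101]
print(flag_anomalies(daily_cpa))  # [14]
```

Note that the spike inflates the next window's standard deviation, so a detector like this is better at catching the first bad day than a sustained shift.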

KPIs and controls

- Experiment velocity: +50–200% weekly iterations without quality loss.

- Share of automated edits: 30–60% of campaign changes.

- Financial metrics: pROAS, CAC, margin; protective “stops” on deviations.

Safety procedures

- RBAC access to APIs and sandboxed environments.

- Full action logging and change rollback.

- Content policies and a brand lexicon (no restricted topics, no misrepresentation of offers).

- Model drift testing and regular recalibration.
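Full action logging and rollback fit together naturally if every change records its previous value. A minimal Python sketch follows, assuming campaign settings live in a simple key-value `state` dict (a hypothetical stand-in for real ad platform API calls). The audit trail is append-only, and a rollback is itself logged as a new entry rather than erasing history.

```python
import time

_MISSING = object()  # marks keys that did not exist before a change


class ActionLog:
    def __init__(self) -> None:
        self.entries: list[dict] = []  # full audit trail, never truncated
        self._undo: list[int] = []     # indices of changes that can still be reverted

    def apply(self, state: dict, key: str, new_value, actor: str) -> None:
        # Record the old value before changing it so the change is reversible.
        self.entries.append({"ts": time.time(), "actor": actor, "action": "set",
                             "key": key, "old": state.get(key, _MISSING), "new": new_value})
        self._undo.append(len(self.entries) - 1)
        state[key] = new_value

    def rollback(self, state: dict, actor: str = "operator", n: int = 1) -> None:
        """Revert the last n changes, newest first, logging each revert."""
        for _ in range(min(n, len(self._undo))):
            change = self.entries[self._undo.pop()]
            if change["old"] is _MISSING:
                state.pop(change["key"], None)
            else:
                state[change["key"]] = change["old"]
            self.entries.append({"ts": time.time(), "actor": actor, "action": "revert",
                                 "key": change["key"], "old": change["new"],
                                 "new": change["old"]})


state = {"bid:c1": 1.0}
log = ActionLog()
log.apply(state, "bid:c1", 1.2, actor="bidding_agent")
log.apply(state, "budget:c1", 500, actor="bidding_agent")
log.rollback(state, n=2)
print(state)  # {'bid:c1': 1.0}
```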

Mini-prompt template for a creative agent

Context: campaign goal X, audience Y, brand constraints Z. Generate 10 headline variants and 5 visual directions in styles A/B/C. For each, state the hypothesis and target KPI. Follow the brand lexicon and legal requirements.

Typical pilot results

For an e-commerce brand, average CTR rose 18% and CPA fell 12%. Time from hypothesis to result dropped from 7 days to 2.

Practice 4: Ethical data collection and testing with mobile proxies

Goal

Enable legitimate, resilient market-signal collection, ad checks, and product QA amid tighter privacy and anti-bot policies.

Where mobile proxies are indispensable

- Ad verification: validate impressions/creatives/bids across regions and mobile networks.

- Price intelligence: monitor price/availability dynamics on marketplaces.

- ASO and app stores: simulate mobile users to verify rankings, reviews, and regional differences.

- Localization and UX: QA mobile flows, language packs, and in-app A/B tests.

Legal and ethical guardrails

- Comply with laws and platform terms. Respect robots.txt, rate limits, and avoid collecting PII without consent.

- Use only legitimate providers that document how they source their IPs and can show that device owners consented to participate.

- Maintain logs, conduct DPIAs (data protection impact assessments), and KYC your provider.

Technical plan

- Architecture: headless browsers (Playwright/Puppeteer) + rotating mobile IPs + anti-detect (authentic device fingerprints, no identity spoofing).

- Rotation: change IP/ASN on schedules or triggers (rate-limit, CAPTCHA), add human-like pauses.

- Validation: compare to a control panel, log HTTP responses, capture screenshots.

- Anti-bot: solve CAPTCHAs via partner services or approved APIs; avoid prohibited bypasses.

- Data schema: normalized events (time, geo, network, URL, element, price), quality checks, deduplication.
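The data-schema step can be sketched in Python as follows. This is illustrative only: the raw field names, the `shop.example` URLs, the price format (a dot as decimal separator), and the one-hour deduplication bucket are all assumptions, and the quality check is deliberately crude.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class PriceObservation:
    observed_at: datetime  # UTC
    geo: str               # region code, e.g. "US-CA"
    network: str           # mobile carrier label
    url: str
    element: str           # selector or SKU that was read
    price: float


def parse_price(text: str) -> float:
    # Assumes "." is the decimal separator; drops currency symbols and thousands commas.
    return float("".join(ch for ch in text if ch.isdigit() or ch == "."))


def normalize(raw: dict) -> PriceObservation:
    return PriceObservation(
        observed_at=datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
        geo=raw["geo"].strip().upper(),
        network=raw["network"].strip().lower(),
        url=raw["url"].split("?", 1)[0],  # drop query strings such as tracking params
        element=raw["element"],
        price=parse_price(raw["price"]),
    )


def passes_quality(obs: PriceObservation, max_price: float = 1_000_000) -> bool:
    return 0 < obs.price <= max_price and obs.url.startswith("https://")


def dedupe(observations: list[PriceObservation]) -> list[PriceObservation]:
    """Keep the first reading per page element, geo, and network in each clock hour."""
    seen, unique = set(), []
    for obs in observations:
        hour = obs.observed_at.replace(minute=0, second=0, microsecond=0)
        key = (obs.url, obs.element, obs.geo, obs.network, hour)
        if key not in seen:
            seen.add(key)
            unique.append(obs)
    return unique


raw_rows = [
    {"ts": 1700000000, "geo": " us-ca ", "network": "CarrierA",
     "url": "https://shop.example/p/1?utm_source=x", "element": "#price", "price": "$1,299.99"},
    {"ts": 1700000100, "geo": "US-CA", "network": "carriera",
     "url": "https://shop.example/p/1", "element": "#price", "price": "$1,299.99"},
    {"ts": 1700000100, "geo": "US-NY", "network": "carriera",
     "url": "https://shop.example/p/1", "element": "#price", "price": "$1,249.00"},
]
clean = dedupe([o for o in map(normalize, raw_rows) if passes_quality(o)])
print(len(clean))  # 2: the two US-CA readings in the same hour collapse into one
```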

Resilience checklist

- Maintain realistic user-agent profiles that match your target devices.

- Back-off strategy for 429/403 errors.

- Alerting on suspicious anomalies (sudden price spikes, placement outages).

- Ethics report and proxy provider SLA review.
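For the back-off item, a common pattern is exponential back-off with random jitter that honors the server's `Retry-After` header on 429 responses. The Python sketch below additionally assumes that a 403 usually signals denied access rather than a transient limit, so it retries once and then stops for human review. The base delay, cap, and attempt limits are illustrative, and only the delay-in-seconds form of `Retry-After` is handled.

```python
import random
from typing import Optional

BASE_DELAY_S = 1.0   # illustrative values; tune per target and agreement
MAX_DELAY_S = 300.0
RETRYABLE = (403, 429, 503)


def plan_retry(status: int, attempt: int, retry_after: Optional[str] = None,
               max_attempts: int = 5, max_403_attempts: int = 1) -> Optional[float]:
    """Seconds to wait before retry number `attempt` (0-based), or None to stop."""
    if status not in RETRYABLE or attempt >= max_attempts:
        return None
    if status == 403 and attempt >= max_403_attempts:
        return None  # stop and alert a human instead of hammering the site
    if status == 429 and retry_after is not None and retry_after.isdigit():
        # Honor the server's explicit wait, capped at MAX_DELAY_S.
        return min(float(retry_after), MAX_DELAY_S)
    # Exponential back-off with "full jitter": a random wait up to the current ceiling.
    return random.uniform(0, min(MAX_DELAY_S, BASE_DELAY_S * 2 ** attempt))
```

Jitter spreads retries from many workers over time, so a fleet of collectors does not hit the same host in synchronized bursts after a rate limit.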

Example

A delivery platform used mobile proxies to uncover regional price differences of 5–12% at a competitor. Adjusting prices and promotions lifted orders by 9% in a month.

Practice 5: Server-side analytics and conversions — the bedrock of accurate decisions

Goal

Eliminate event loss from blockers and cookie limits, improve signal quality to ad platforms, and connect marketing to revenue.

Components

- Server-side tagging: ingest, cleanse, normalize, and route events.

- Identity: user_id, hashed email/phone, device hints, deduplication.

- Conversions: CAPI, Enhanced Conversions, and offline conversions per channel.

- Consent: correct Consent Mode signals attached to every event.
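When the same conversion is sent from both the browser and the server, the receiving side needs a shared key to count it once; Meta's Conversions API, for example, deduplicates browser and server events that share the same `event_name` and `event_id`. A minimal Python sketch of the same idea inside your own pipeline follows, assuming each event carries a hypothetical `source` field. It keeps one copy per key and prefers the server-side one.

```python
def deduplicate(events: list[dict]) -> list[dict]:
    """Keep one event per (event_name, event_id), preferring the server-side copy."""
    kept: dict[tuple[str, str], dict] = {}
    for event in events:
        key = (event["event_name"], event["event_id"])
        current = kept.get(key)
        # First copy wins, unless a server-side copy arrives for a browser-side one.
        if current is None or (current["source"] != "server" and event["source"] == "server"):
            kept[key] = event
    return list(kept.values())  # dicts keep first-seen order


events = [
    {"event_name": "purchase", "event_id": "order-1", "source": "browser"},
    {"event_name": "purchase", "event_id": "order-1", "source": "server"},
    {"event_name": "purchase", "event_id": "order-2", "source": "browser"},
]
print([(e["event_id"], e["source"]) for e in deduplicate(events)])
# [('order-1', 'server'), ('order-2', 'browser')]
```

Using the order or transaction ID as `event_id` on both sides is what makes this work; random per-hit IDs would never match.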

Step-by-step

- Define your event schema: required fields, types, and rules.

- Deploy a server container and a protected endpoint (TLS, WAF).

- Migrate key pixels to server-side; keep client-side as backup only.

- Connect your DWH to server events and conversion uploads to channels.

- Build verification: track the share of rejected events, duplicates, and delivery lag.
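The verification step can be reduced to a few numbers computed over each batch of events. This Python sketch assumes each delivered event is a dict with hypothetical fields `event_id`, `accepted` (whether the destination accepted it), and `sent_at`/`received_at` timestamps in seconds. It reports the rejected share, the duplicate share, and the 95th-percentile delivery lag.

```python
from statistics import quantiles


def delivery_report(events: list[dict]) -> dict[str, float]:
    n = len(events)
    if n == 0:
        return {"rejected_share": 0.0, "duplicate_share": 0.0, "p95_lag_s": 0.0}
    rejected = sum(1 for e in events if not e["accepted"])
    duplicates = n - len({e["event_id"] for e in events})
    lags = [e["received_at"] - e["sent_at"] for e in events]
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    # method="inclusive" keeps the estimate within the observed range.
    p95 = quantiles(lags, n=20, method="inclusive")[-1] if n > 1 else float(lags[0])
    return {"rejected_share": rejected / n,
            "duplicate_share": duplicates / n,
            "p95_lag_s": p95}


batch = [
    {"event_id": "a", "accepted": True, "sent_at": 0, "received_at": 1},
    {"event_id": "b", "accepted": True, "sent_at": 0, "received_at": 2},
    {"event_id": "b", "accepted": False, "sent_at": 0, "received_at": 3},
    {"event_id": "c", "accepted": True, "sent_at": 0, "received_at": 4},
]
print(delivery_report(batch))
```

A p95 rather than an average lag is used because a few slow deliveries can break time-sensitive bidding signals even when the mean looks healthy.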

Readiness checklist

- 90%+ of revenue events flow through server-side.

- CRM vs. analytics order variance under 3%.

- An SLA dashboard for events (latency, volume, errors) is in place.
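The "under 3%" variance check between CRM and analytics is easy to automate as a daily job. A minimal Python sketch follows, assuming both systems can export order counts per day as dicts keyed by date string, with the CRM treated as the source of truth.

```python
def order_variance(crm_orders: dict[str, int],
                   analytics_orders: dict[str, int]) -> dict[str, float]:
    """Relative gap per day, using CRM counts as the denominator."""
    report = {}
    for day, crm_count in crm_orders.items():
        tracked = analytics_orders.get(day, 0)  # a missing day counts as zero tracked orders
        # Days with no CRM orders are reported as 0.0 in this sketch.
        report[day] = abs(crm_count - tracked) / crm_count if crm_count else 0.0
    return report


def days_over_threshold(report: dict[str, float], threshold: float = 0.03) -> list[str]:
    return sorted(day for day, gap in report.items() if gap > threshold)


crm = {"2026-01-05": 200, "2026-01-06": 150}
analytics = {"2026-01-05": 196, "2026-01-06": 140}
print(days_over_threshold(order_variance(crm, analytics)))  # ['2026-01-06']
```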

Example

A SaaS company implemented Enhanced Conversions and CAPI: matched conversions rose 21%, optimization shifted to LTV, CAC fell 8%.

Practice 6: Creative engineering with AI — turning ideas into revenue

Goal

Systematically boost creative performance using data and generative models within safe templates.

The ICE-CREO method

- I — Insights: mine reviews, support chats, NPS, and search queries for insights.

- C — Constraints: brand rules, legal red lines, and approved wording.

- E — Experiments: A/B/n plan, significance criteria, duration.

- CREO — Concepts, Renders, Edits, Orchestrate: generate concepts, render formats, make micro-edits, and orchestrate tests via an agent.

Step-by-step

- Compile a bank of customer messages, objections, and “moments of truth.”

- Prepare prompt templates by format: Stories, banners, CTV, landing sections.

- Enable agent orchestration: upload variants to platforms, control test frequency and budget.

- Adopt a “creative score” combining CTR, scroll, attention, and post-click engagement.
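One way to build the creative score is to rescale each metric within the current test and take a weighted sum. The Python sketch below uses min-max scaling and hypothetical weights; the metric names mirror the step above, but the weights and the 0–100 scale are assumptions to calibrate against your own downstream conversions.

```python
# Hypothetical weights; calibrate them against downstream conversions.
WEIGHTS = {"ctr": 0.3, "scroll": 0.2, "attention": 0.2, "post_click": 0.3}


def min_max(values: list[float]) -> list[float]:
    """Rescale to 0..1 within the current test; identical values map to 0.5."""
    lo, hi = min(values), max(values)
    return [0.5 if hi == lo else (v - lo) / (hi - lo) for v in values]


def creative_scores(creatives: list[dict]) -> list[float]:
    """Weighted 0-100 score per creative from the metrics named in WEIGHTS."""
    scaled = {m: min_max([c[m] for c in creatives]) for m in WEIGHTS}
    return [
        round(100 * sum(w * scaled[m][i] for m, w in WEIGHTS.items()), 1)
        for i in range(len(creatives))
    ]


variants = [
    {"ctr": 0.021, "scroll": 0.62, "attention": 3.1, "post_click": 0.12},
    {"ctr": 0.015, "scroll": 0.55, "attention": 2.4, "post_click": 0.08},
    {"ctr": 0.018, "scroll": 0.70, "attention": 2.0, "post_click": 0.10},
]
print(creative_scores(variants))
```

Because scaling is relative to the variants in the same test, scores compare creatives within a test; comparing across tests would need a fixed baseline instead.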

Checklist

- Maintain 20–30 active variants per major audience.

- Map attribution signals for each format (including server-side).

- Connect your brand codebook and lexicon to generation tools.

Example

A marketplace ran daily micro-tests of creatives: the average creative score rose 24%, and the creative-driven contribution to ROAS grew 13%.

Practice 7: An experimentation culture — deciding under uncertainty

Goal

Build a system where contested hypotheses are resolved quickly and objectively, and budgets align with proven incrementality.

The SPICE framework

- S — Scope: state the hypothesis and causal mechanism.

- P — Power: ensure sample size/budget sufficiency.

- I — Instrumentation: configure events and tracking.

- C — Control: establish a control group/region.

- E — Evaluation: compute uplift and confidence intervals, then decide.
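The Power and Evaluation steps are where teams most often cut corners. The Python sketch below uses the standard normal-approximation formulas for a two-arm test on a conversion rate: one function estimates users per arm for a given baseline rate and minimum detectable relative lift, the other returns the absolute uplift with a Wald confidence interval. Geo and switchback designs need different methods, and the defaults (alpha 0.05, power 0.8) are conventions rather than requirements.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_arm(p_base: float, mde_rel: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Users per arm to detect a relative lift `mde_rel` on baseline rate `p_base`
    with a two-sided two-proportion z-test (normal approximation)."""
    p1, p2 = p_base, p_base * (1 + mde_rel)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def uplift_ci(conv_c: int, n_c: int, conv_t: int, n_t: int,
              alpha: float = 0.05) -> tuple[float, tuple[float, float]]:
    """Absolute uplift (treatment minus control) with a Wald confidence interval."""
    p_c, p_t = conv_c / n_c, conv_t / n_t
    diff = p_t - p_c
    se = sqrt(p_c * (1 - p_c) / n_c + p_t * (1 - p_t) / n_t)
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return diff, (diff - z * se, diff + z * se)


# Power: baseline conversion 5%, smallest lift worth detecting +10% relative.
n = sample_size_per_arm(p_base=0.05, mde_rel=0.10)

# Evaluation: 10,000 users per arm, 500 vs. 560 conversions.
diff, (low, high) = uplift_ci(conv_c=500, n_c=10_000, conv_t=560, n_t=10_000)
print(n, round(diff, 4), round(low, 4), round(high, 4))
```

With these numbers the interval includes zero, so the test has not yet proven an uplift; 10,000 users per arm is also well below the roughly 31,000 the power calculation asks for. That is the kind of mismatch the Power step is meant to catch before launch.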

Step-by-step

- Hold a weekly experimentation council that maintains a hypothesis backlog scored by expected impact.

- Prioritize with tags: low-risk/high-impact hypotheses move forward within the quarter.

- Design templates: A/B, geo, PSA, switchback.

- Keep a single experiment book (vector store accessible to agents).

Checklist

- Run at least 2–3 valid experiments per week on the key product line.

- Automatic notifications when an experiment completes, with results loaded into BI.

- The “agent knowledge base” expands every sprint.

Example

An edtech company adopted SPICE: the share of "winning" experiments rose from 22% to 31%, and overall quarterly revenue uplift reached 4.7% on the same budget.

Common mistakes: what not to do in 2026

- Expecting MMM “magic” without experimental validation—risk of over-investing.

- Ignoring Consent Mode v2—you’ll lose attribution quality and face compliance risk.

- Doing “gray” scraping without ethics or rights—expect blocks and reputational damage.

- Launching agents without guardrails—uncontrolled spend and brand violations.

- Believing retail media “works by itself”—without SKU analytics and incrementality you’ll overstate impact.

- Delaying the server-side migration: competitors are already sending better signals.

- Thinking “contextual = 2008”—modern models and signals dramatically improved accuracy.

Tools and resources: what to use and where to build skills

Data and activation

- CDP: Segment, mParticle, Bloomreach, RudderStack, or custom builds on BigQuery/Snowflake/Databricks.

- Consent/CMP: OneTrust, Didomi, or built-in CMPs in major CMSs.

- Server-side: GTM Server-Side, Cloudflare Workers/Zaraz, custom Node/Go solutions.

Attribution and measurement

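- CAPI/EC: Meta's Conversions API, Google's Enhanced Conversions, and comparable server-side conversion APIs on other major platforms.

- MMM: Robyn, LightweightMMM, or custom models built with PyMC (formerly PyMC3) and Prophet.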

- Experiments: Eppo, GrowthBook, or custom frameworks on feature flags.

AI agents and generation

- Orchestration: LangChain/agents, custom schedulers, MLOps stacks.

- Creatives: image/video generative models, editors with brand safeguards.

- Vector DBs: for agent memory and knowledge.

Quality and external data collection

- Mobile proxies: providers with KYC, management panels, rotation.

- Testing frameworks: Playwright, Puppeteer, Appium.

- Ad verification: DoubleVerify, Integral Ad Science (IAS).

BI and visualization

- Looker, Power BI, Tableau, Metabase, Superset.

Case studies and results: winning in the new reality

Case 1: E-commerce shifts to first-party 2.0

Challenge: retargeting quality fell and CAC rose amid tracking loss. Actions: implemented Consent Mode v2, server-side, CDP, and zero-party surveys. Results in 12 weeks: opt-in +14 pp, users with contact data +19%, CAC -11%, LTV +7% via personalization. Payback in 4.5 months.

Case 2: Fintech, experiments and MMM

Challenge: unclear contribution of contextual and CTV. Actions: geo-lift tests across six regions, 24 months of MMM, server-side conversions. Result: confirmed contextual uplift +6–9%, CTV +3–5%. Budgets reallocated, pROAS +9% for the quarter.

Case 3: Marketplace, AI agents for creatives

Challenge: slow creative iteration. Actions: creative agent + test orchestration. Result: +22% CTR, -13% CPA, cycle time cut from 5 days to 36 hours.

Case 4: Logistics, mobile proxies and competitor monitoring

Challenge: region-based competitor pricing. Actions: ethical mobile IP collection, normalization and QA, anomaly alerts. Result: pricing adapted in nine regions, orders +9%, NPS +2.1 points.

FAQ: 10 deep questions about 2026

1. Do we need to drop all cookies immediately?

No. First-party cookies remain vital for sessions and on-site personalization. What’s going away: third-party cookies and cross-site tracking. Strategy: lean on first-party/zero-party data, Consent Mode, server-side attribution, and Privacy Sandbox APIs.

2. Will Topics/Protected Audience replace “old” retargeting?

Not entirely. Mechanics and coverage change. But the mix of Protected Audience and strong first-party segments already works—especially when supported by server-side signals and experimental validation.

3. Should small businesses invest in MMM?

If you have stable spend and 12–18 months of history, yes—in lightweight forms. Always validate takeaways with geo experiments and watch for seasonal drift.

4. Which roles will AI agents replace first?

Partially: routine media buying, creative iteration, reporting, and anomaly monitoring. Humans keep business logic, brand stewardship, and strategy.

5. Is it risky to grant agents access to ad accounts?

Yes, if the access comes without limits. Use sandboxes, daily caps, tiered access, full logging, and rollback, and add checkpoints with human approval for major changes.

6. How should we measure retail media impact?

Through incrementality (holdout/geo), platform post-campaign reports, and MMM. Separate brand effects from demand cannibalization.

7. Are mobile proxies a risk?

They are if you break rules. Work with legitimate providers, comply with platform terms, don’t collect PII without consent, document DPIAs, and respect rate limits.

8. What’s the top KPI in 2026?

Not a single one but a hierarchy: profit/margin and LTV/CAC as the north star; then pROAS/CPA; with diagnostic reach/frequency/attention.

9. What if an agent produces questionable creatives?

Enforce mandatory human review for sensitive categories, maintain a brand lexicon, stop-lists, tone rules, and automatic keyword-trigger filtering.

10. How can we get ready for 2026 in 60 days?

Enable Consent Mode v2, move critical events server-side, pilot contextual and Protected Audience, collect zero-party data, set up at least one geo experiment, and feed conversions to channels via APIs.

Conclusion: your 2026 action plan

We’re entering an era where discipline wins: not the loudest teams, but the ones that systemize data, agents, and experiments. The post-cookie stack isn’t a chain of hacks; it’s a new operating model. Next steps are simple, but they demand focus.

90-day plan

- Data: enable Consent Mode v2, move 80%+ of events server-side, and implement a basic CDP/profile store.

- Media: test contextual 2.0 and Protected Audience, start retail media, and run a geo-lift.

- Agents: deploy creative and bidding agents with guardrails; log decisions.

- Experiments: stand up a council, a backlog, and 2–3 valid tests per week.

- Ethics & proxies: KYC your provider, document a DPIA, set limits and reporting.

By 2026 you should have a system where data is lawful and rich, agents are fast and controlled, and measurement is causal. Then market changes become background noise—and you keep growing steadily.