AI Bot Detectors in 2026: How They Work—Without Breaking Real Users’ UX

Article contents

- Introduction: why this matters and what you’ll learn

- Basics: foundational concepts (for newcomers)

- Deep dive: advanced aspects of the field

- Practice 1: design “legitimacy by default” and reduce false blocks

- Practice 2: tuning Cloudflare Turnstile, HUMAN, and DataDome for low friction

- Practice 3: ethical testing and sandboxes to validate anti‑bot defenses

- Practice 4: behavioral signals and a frictionless UX

- Practice 5: the role of mobile networks and proxies—what changes for defenders

- Practice 6: models, features, and an MLOps review of your anti‑bot stack

- Common mistakes: what not to do

- Tools and resources: what to use

- Case studies and results: real‑world applications

- FAQ: 10 in‑depth questions

- Conclusion: summary and next steps

Introduction: Why this matters and what you’ll learn

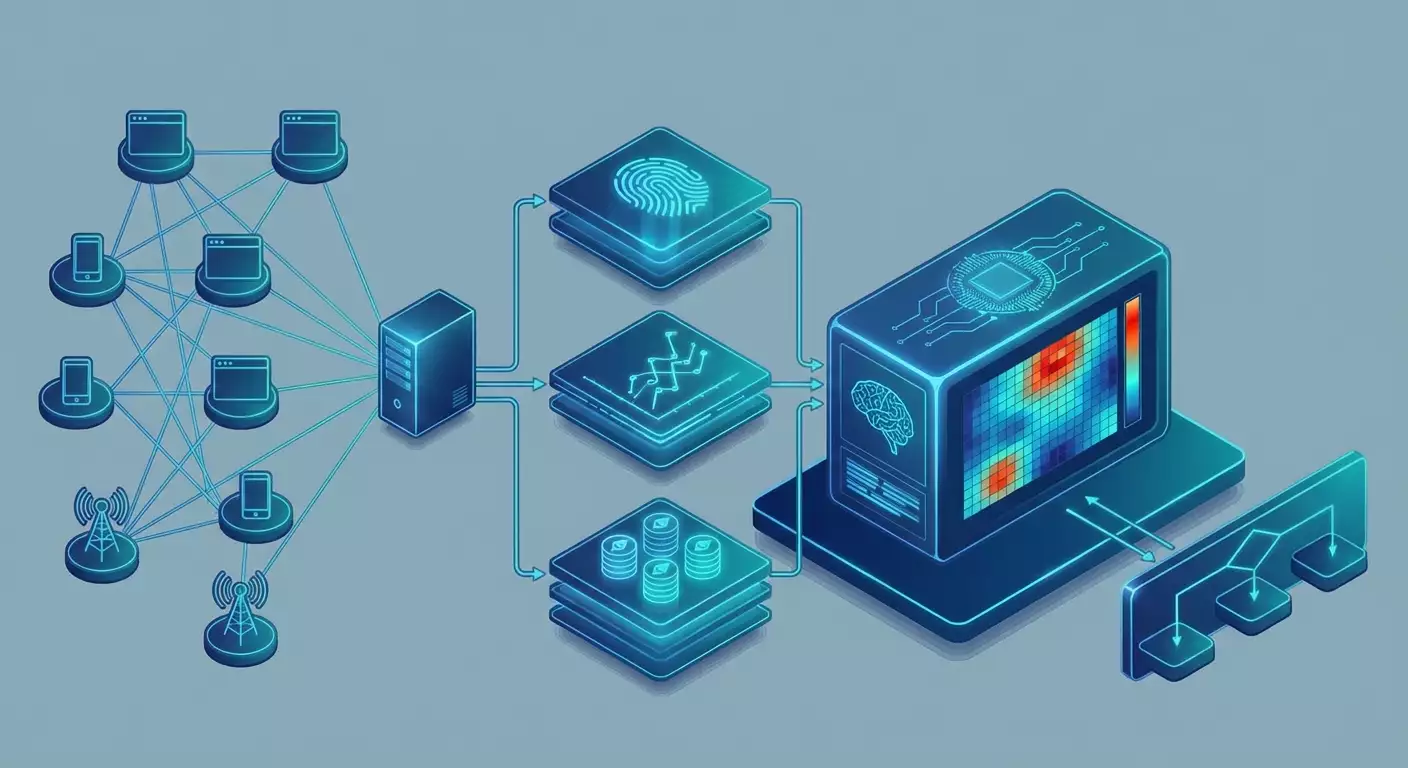

By 2026, the share of non‑human traffic remains at record highs, and digital businesses are still vulnerable to automated abuse. Industry reports from 2024–2025 suggest that close to half of all traffic is automated, with roughly a third of all traffic attributed to malicious bots and the remaining automated share split between legitimate search crawlers, monitoring agents, and service integrations. In response, defenses have evolved from simple CAPTCHAs to multi‑signal behavioral models and device attestation backed by cryptography. Product, security, and growth teams all face the same challenge: protect the business without breaking the experience for real users and approved integrations.

This guide is a field manual for leaders, engineers, and analysts. We’ll unpack how modern AI bot detectors work today, focusing on Cloudflare Turnstile, HUMAN (which merged with PerimeterX in 2022), and DataDome. You’ll learn what fingerprinting and behavioral analytics really mean in 2026, how ML models are built, why mobile networks produce unique signals, and how to run ethical testing. We do not provide or endorse instructions for bypassing defenses. Our goal is to help you reduce risk, minimize false positives, and enable legitimate automation transparently and by the rules.

What you’ll get: a modern taxonomy of signals, practical frameworks for tuning systems and reducing friction, implementation checklists, step‑by‑step sandbox testing procedures, common pitfalls and fixes, a tool stack, and real case studies with measurable results. Let’s dive in.

Basics: foundational concepts (for newcomers)

What bot detection is. Bot management is a set of server‑ and client‑side mechanisms that assign a risk score to each request based on many signals: network, device, behavior, session history, and context. Depending on where that score falls relative to configured thresholds, the system may allow the request, apply a friction check (e.g., Turnstile), slow it down, require authentication, or block it.

Traffic types. Think in three layers: good bots (search crawlers, uptime agents), the gray layer (integration scripts, custom RPA with the site owner’s permission), and bad actors (unauthorized scraping, login attacks, carding, scalping). Each layer is handled by policy, reflected in your security posture, robots.txt, partner agreements, and allowlist profiles.

Signal channels. 1) Network: IP reputation, ASN, geo, port anomalies, TLS fingerprints, RTT and jitter stability. 2) Client: browser properties, font stack, WebGL/Canvas prints, sensor data, time zone, battery state (mobile), platform traits. 3) Behavioral: click rhythm, scrolls, field focus, delays, cursor paths, touch patterns. 4) Historical: session profile, frequency and repeat behavior, conversions. 5) Cryptographic: device/browser attestation and private access tokens.

Decisions and actions. Systems produce a risk score (e.g., 0–100). Threshold‑based rules then apply: allow, trigger an invisible or visible challenge, restrict sensitive operations, or defer a check. The goal isn’t total blocking; it’s risk management—reducing attack impact with minimal friction for real users.
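The threshold logic described above can be sketched in a few lines. This is only an illustration: the cut‑offs (30, 60, 85) and the action names are assumptions chosen for the example, not values taken from any vendor.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    INVISIBLE_CHALLENGE = "invisible_challenge"
    VISIBLE_CHALLENGE = "visible_challenge"
    RESTRICT = "restrict_sensitive_operations"


# Hypothetical cut-offs on a 0-100 risk scale; tune them against your own data.
THRESHOLDS = [
    (30, Action.ALLOW),
    (60, Action.INVISIBLE_CHALLENGE),
    (85, Action.VISIBLE_CHALLENGE),
]


def decide(risk_score: float) -> Action:
    """Map a 0-100 risk score to the least intrusive action that covers it."""
    if not 0 <= risk_score <= 100:
        raise ValueError("risk_score must be within 0-100")
    for upper_bound, action in THRESHOLDS:
        if risk_score < upper_bound:
            return action
    # Anything at or above the last cut-off gets the strictest response.
    return Action.RESTRICT
```

Keeping the cut‑offs in one table makes it easier to version them and to apply different tables per endpoint.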

Ethical and legal frame. Lawful automation means honoring Terms of Service, robots.txt, explicit permissions, clear identification of your agents, and privacy‑aware data handling. Any attempt to masquerade as a user without permission can violate policy and law, and lead to blocks and reputational damage. This guide assumes a compliance‑first approach.

Deep dive: advanced aspects of the field

Cloudflare Turnstile in 2026 is a “non‑CAPTCHA” challenge that can run alongside Cloudflare Bot Management. It can rely on Private Access Tokens (PATs), in which the device or OS vendor attests to a legitimate client and a token issuer vouches for it without revealing identity. Typical flow: 1) a client script collects low‑friction signals, 2) Cloudflare computes risk, 3) if needed the client is silently attested, 4) in harder cases a lightweight challenge appears. Its strengths are massive network‑correlated reputation and cryptographic attestation.
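On the server side, the token produced by the widget still has to be validated against Cloudflare's `siteverify` endpoint before the request is trusted. The sketch below shows one way to do that with the standard library. The injectable `post` parameter (added so the function can be exercised without network access) and the fail‑closed behavior on transport errors are assumptions of this example, not requirements of the service.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Optional

SITEVERIFY_URL = "https://challenges.cloudflare.com/turnstile/v0/siteverify"


def post_form(url: str, fields: dict) -> dict:
    """Send a form-encoded POST and decode the JSON reply."""
    body = urllib.parse.urlencode(fields).encode()
    request = urllib.request.Request(url, data=body, method="POST")
    with urllib.request.urlopen(request, timeout=5) as response:
        return json.load(response)


def verify_turnstile(token: str, secret: str, remote_ip: Optional[str] = None,
                     post: Callable[[str, dict], dict] = post_form) -> bool:
    """Return True only if the verification endpoint reports success."""
    fields = {"secret": secret, "response": token}
    if remote_ip:
        fields["remoteip"] = remote_ip
    try:
        result = post(SITEVERIFY_URL, fields)
    except (OSError, ValueError):
        # Failing closed is a policy assumption; some flows may prefer a retry.
        return False
    return result.get("success") is True
```

Whether to fail open or closed when the endpoint is unreachable is a product decision: failing closed protects sensitive actions, while failing open protects conversion on low‑risk pages.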

HUMAN (which merged with PerimeterX in 2022) is a mature platform built around e‑commerce and login protection. It fuses client telemetry, behavioral profiles, server labeling, and threat‑intel network effects. It’s optimized for high‑volume attacks like credential stuffing and carding. The product builds long‑lived profiles and can run in “Silent Mode” (no added friction) before escalating to interactive checks when risk is high.

DataDome is a strong anti‑bot provider known for balancing client‑ and server‑side controls and offering broad integrations (edge, SDK, WAF plugins). It leans on ML scoring, IP/ASN reputation, TLS fingerprints, and vertical‑specific heuristics for tickets, marketplaces, and SaaS. A core principle is a continuous feedback loop: validating outcomes against conversions and incidents.

Fingerprinting in 2026 isn’t about one Canvas print—it’s an ensemble of weak signals: font stability, GPU quirks, TLS ClientHello patterns, engine runtime artifacts, sensor frequencies, noise, and more. Modern browsers ship anti‑fingerprinting (Safari, Firefox), while platforms offer private tokens that reduce the need for aggressive signal collection. Defenses rely on cumulative probabilities; single signals rarely decide.
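To make "cumulative probabilities" concrete, here is a minimal sketch that combines several weak signals by adding their log‑likelihood ratios to a prior. It assumes the signals are conditionally independent (a naive Bayes simplification that production systems generally do not rely on), and the ratios in the usage note are invented for illustration.

```python
import math
from typing import Sequence


def combine_weak_signals(prior_bot_probability: float,
                         likelihood_ratios: Sequence[float]) -> float:
    """Return P(bot) after folding each signal's likelihood ratio into the prior.

    A ratio above 1 means the observation is more common among bots,
    a ratio below 1 means it is more common among humans.
    """
    if not 0 < prior_bot_probability < 1:
        raise ValueError("prior must be strictly between 0 and 1")
    log_odds = math.log(prior_bot_probability / (1 - prior_bot_probability))
    for ratio in likelihood_ratios:
        if ratio <= 0:
            raise ValueError("likelihood ratios must be positive")
        log_odds += math.log(ratio)
    return 1 / (1 + math.exp(-log_odds))
```

For example, with a 10% prior, two mildly suspicious signals with ratio 3 each move the estimate to about 50%, while a single one leaves it at 25%. No individual signal decides the outcome.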

Behavioral analytics captures micro‑dynamics: time distributions between events, tiny trajectory fluctuations, transition context, and action sequences. What matters isn’t “perfectly human movements” (that’s a myth), but consistency with population norms. Accuracy improves with large reference datasets and normalization by device class, locale, and scenario.
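A small sketch of "consistency with population norms": compare a session's median gap between events with a reference distribution for the same device class. The reference means and standard deviations below are made‑up placeholders. A real system would learn them from large datasets and use many more features than one timing statistic.

```python
from statistics import median

# Hypothetical reference statistics (median inter-event gap, in ms) per device class.
REFERENCE = {
    "desktop": {"mean": 180.0, "std": 60.0},
    "mobile": {"mean": 260.0, "std": 90.0},
}


def inter_event_gaps(timestamps_ms):
    """Return the gaps between consecutive event timestamps."""
    ordered = sorted(timestamps_ms)
    return [later - earlier for earlier, later in zip(ordered, ordered[1:])]


def timing_z_score(timestamps_ms, device_class):
    """How many reference standard deviations the session's median gap is from the norm."""
    gaps = inter_event_gaps(timestamps_ms)
    if not gaps:
        raise ValueError("need at least two events")
    reference = REFERENCE[device_class]
    return (median(gaps) - reference["mean"]) / reference["std"]
```

Normalizing by device class matters here: the same 10 ms cadence scores differently against the desktop and mobile references, so a single global norm would misjudge one of the two populations.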

ML models are typically ensembles of gradient boosting, graph models, and neural nets trained on billions of requests. The 2025–2026 trend is privacy by design: federated learning, differential privacy, and data minimization. The aim is to keep false positives low and outcomes predictable while collecting less raw data.

Cryptographic attestation strengthens the ecosystem: Private Access Tokens, mobile OS device attestation, and platform integrity signals. This reduces invasive tracking and lets honest clients pass invisibly as long as they stay within the rules—key to low friction.

Practice 1: Design “legitimacy by default” and reduce false blocks

Goal: let real users and approved automations pass seamlessly while attacks get filtered. We’re not bypassing systems—we’re building an architecture defenses can trust.

LEGIT‑6 framework

- Legal: lock down the legal base—ToS, partner agreements, robots.txt, data policy.

- Expose: provide official integration paths—public APIs, webhooks, export channels, dedicated endpoints with separate limits.

- Govern: define automation identity—separate keys, signed requests, stable User‑Agents and metadata, agreed allowlist/verified‑bot status.

- Instrument: ship transparent telemetry—tracing, correlation IDs, session attributes so defense teams see context.

- Tune: co‑tune thresholds and rules with security and product, using data.

- 6: a six‑week improvement cycle—hypotheses, A/B tests, retros.
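The "signed requests" idea under Govern can be sketched with an HMAC over a few request fields. The field order, the five‑minute clock‑skew window, and the function names are assumptions for illustration. An actual scheme should follow whatever the site owner and the integration partner agree on.

```python
import hashlib
import hmac
import time


def sign_request(secret: bytes, agent_id: str, method: str, path: str,
                 timestamp: int) -> str:
    """Produce a hex HMAC-SHA256 signature over the agreed request fields."""
    message = "\n".join([agent_id, method.upper(), path, str(timestamp)]).encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_request(secret: bytes, agent_id: str, method: str, path: str,
                   timestamp: int, signature: str, now=None,
                   max_skew_seconds: int = 300) -> bool:
    """Accept only fresh requests whose signature matches the shared secret."""
    current = int(time.time()) if now is None else now
    if abs(current - timestamp) > max_skew_seconds:
        return False  # Stale or future-dated request: reject to limit replay.
    expected = sign_request(secret, agent_id, method, path, timestamp)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature)
```

A signed, clearly identified agent gives the defender something stable to allowlist, which is the opposite of masking.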

Step‑by‑step playbook

- Map your traffic: chart user and system flows, classify request types and sensitive operations. Tag sources: browser, mobile app, partner integration, internal agents.

- Official channels: where possible, move integrations from HTML parsing to APIs with OAuth2/tokens. This slashes false positives and improves observability.

- Identification: agree on “verified bot” status for your service agents. Use stable IPs/ASNs or dedicated signatures and keys.

- Trust signals: send correct headers, consistent TLS stack, honest User‑Agent. Avoid needless masking or randomization—detectors penalize instability.

- Risk rules: define policies: what to slow down, where to require extra auth, how to react to suspicious spikes.

- Observability: enable detector logs, tie them to product metrics (conversion, retention), and alert on FP/FN.
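For the observability step, one possible way to turn joined detector logs and confirmed outcomes into FP/FN rates is sketched below. The record shape (`was_flagged`, `confirmed_bot`) and the alert limits are assumptions. Real pipelines would derive both fields from detector logs and downstream labels.

```python
from typing import Iterable, Tuple


def fp_fn_rates(records: Iterable[Tuple[bool, bool]]) -> Tuple[float, float]:
    """Compute (false_positive_rate, false_negative_rate).

    Each record is (was_flagged, confirmed_bot): whether the detector
    challenged or blocked the session, and what it later turned out to be.
    """
    flagged_humans = humans = missed_bots = bots = 0
    for was_flagged, confirmed_bot in records:
        if confirmed_bot:
            bots += 1
            missed_bots += not was_flagged
        else:
            humans += 1
            flagged_humans += was_flagged
    fp_rate = flagged_humans / humans if humans else 0.0
    fn_rate = missed_bots / bots if bots else 0.0
    return fp_rate, fn_rate


def should_alert(fp_rate: float, fn_rate: float,
                 max_fp: float = 0.01, max_fn: float = 0.20) -> bool:
    """Hypothetical alert rule: fire when either rate exceeds its limit."""
    return fp_rate > max_fp or fn_rate > max_fn
```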

Acceptance checklist

- APIs cover ≥80% of integration scenarios.

- Service agents are identified and approved with security.

- Threshold rules are documented with examples.

- Anti‑bot logs are linked to business analytics.

- Accessibility testing for assistive tech is complete.

Practice 2: Tuning Cloudflare Turnstile, HUMAN, and DataDome for low friction

Principle: each product is unique, but the rule holds—richer context and feedback from you drive better decisions. Use observe‑only modes, staged rollout, and vendor co‑tuning.

Cloudflare Turnstile and Bot Management

- Stage 1 (Observe): enable detection without blocking on a traffic slice (e.g., 10–20%). Capture risk‑score distributions by device and country.

- Stage 2 (Signals): validate client script init, stable loading, and no CSP conflicts. Ensure Private Access Tokens are used where available.

- Stage 3 (Threshold): start with a threshold at which legitimate users see a challenge in no more than 0.5–1% of cases. Add exceptions for known integrations.

- Stage 4 (Actions): define soft reactions for medium risk: slowdowns, re‑checks, or a second factor for critical actions (e.g., saving a payment method).

- Stage 5 (Review): reconcile FP/FN weekly against support tickets and product feedback.
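One way to pick the starting threshold from observe‑only data is to take risk scores of sessions later confirmed as legitimate and find the cut‑off that would challenge at most the target share of them. The sketch below assumes a challenge fires when a score is strictly above the threshold. The function name and inputs are illustrative.

```python
import math


def challenge_threshold(legit_scores, max_challenge_rate=0.01):
    """Return an observed score T such that at most max_challenge_rate
    of the legitimate scores are strictly greater than T."""
    if not legit_scores:
        raise ValueError("need at least one observed score")
    if not 0 <= max_challenge_rate < 1:
        raise ValueError("max_challenge_rate must be in [0, 1)")
    ordered = sorted(legit_scores)
    # Number of legitimate sessions we tolerate above the threshold.
    allowed_above = math.floor(len(ordered) * max_challenge_rate)
    return ordered[len(ordered) - 1 - allowed_above]
```

Computing the threshold separately per device class or country, as captured in Stage 1, avoids one segment absorbing most of the challenges.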

HUMAN (PerimeterX)

- Activate long‑lived profiles to dampen rare anomalies and stabilize risk scores.

- Split policies by endpoint: login, cart, and checkout deserve different thresholds and actions.

- Handle carding incidents with dedicated playbooks so you can tighten rules temporarily without hurting other flows.

DataDome

- Leverage SDK and edge flexibility: keep some logic at the perimeter, some in‑app.

- Enable a feedback API—send conversion and cancellation outcomes so the model learns tricky cases.

- Use a safe mode during major frontend releases to avoid a spike in false positives.

Success metrics

- Challenge rate for real users: ≤1% on key pages.

- Login attack reduction: ≥60% with stable auth conversion.

- Added page load time from protection scripts: no more than 50–80 ms at p95.

- Support tickets about blocks down ≥30% post‑tuning.

Practice 3: Ethical testing and sandboxes to validate anti‑bot defenses

Why it matters: without reproducible tests, teams either overtighten and lose customers or undertighten and miss attacks. Ethical testing means agreed scenarios in a controlled environment with explicit permission.

SAFE‑RUN step‑by‑step

- Scope: define test goals—throughput, challenge stability, noise resilience, UX impact.

- Authorize: secure written permission and temporary policies from the defender. Set up test domains, keys, and a sandbox.

- Fabricate: build traffic generators that emulate device and network profiles while clearly identifying the test and rate‑limiting activity.

- Evaluate: measure risk scores, challenge rate, latency, and FP share. Compare to target benchmarks.

- Refine: co‑adjust thresholds, exceptions, and feedback mechanisms with the vendor.
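For the Fabricate step, a test traffic generator needs two properties above all: a hard rate cap and unmistakable identification. A minimal sketch follows, using a token bucket for the cap. The header names and the User‑Agent string are hypothetical and should be replaced with whatever the defender has agreed to recognize.

```python
import time


class TokenBucket:
    """Simple token bucket: refills at rate_per_second up to capacity."""

    def __init__(self, rate_per_second, capacity, clock=time.monotonic):
        self.rate = rate_per_second
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        """Consume one token if available; return False when over the cap."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def build_test_headers(run_id: str) -> dict:
    """Headers that openly mark a request as authorized test traffic (hypothetical names)."""
    return {
        "User-Agent": "example-antibot-test/1.0 (authorized sandbox run)",
        "X-Test-Run-Id": run_id,
    }
```

The generator would call `try_acquire()` before each request and skip or sleep when it returns False, so the cap holds even if the test script misbehaves.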

Test hygiene checklist

- The test environment is isolated from production or uses a separate domain/key.

- All test agents are clearly identified (headers, query params, tracing).

- Loads are capped to avoid impacting real users.

- Logging includes annotated FP/FN cases with context.

- After tests, rules are reverted and temporary exceptions closed.

Practice 4: Behavioral signals and a frictionless UX

Idea: strong protection shouldn’t be felt. Collect behavioral signals in a minimally invasive way so models perform while UX stays delightful.

Technical tips

- Event quality: send aggregated metrics instead of raw coordinates when privacy allows—histograms of delays, interaction frequencies, session durations.

- Telemetry stability: watch for event loss, batching, and offline retransmit (mobile).

- Accessibility: telemetry must not break assistive tech. Test with screen readers and keyboard‑only navigation.

- Friction gradient: apply layered responses—from invisible scoring to soft challenges, and only then hard blocks.
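The "aggregated metrics instead of raw coordinates" tip can be illustrated with a delay histogram computed on the client side before anything is sent. The bucket edges are arbitrary assumptions. Pick edges that match what your model actually consumes.

```python
from bisect import bisect_right

# Hypothetical bucket edges in milliseconds; the last bucket is open-ended.
BUCKET_EDGES_MS = [50, 100, 250, 500, 1000]


def delay_histogram(delays_ms):
    """Count delays per bucket: [<50, 50-99, 100-249, 250-499, 500-999, >=1000]."""
    counts = [0] * (len(BUCKET_EDGES_MS) + 1)
    for delay in delays_ms:
        counts[bisect_right(BUCKET_EDGES_MS, delay)] += 1
    return counts
```

Sending six counters per session instead of every raw timestamp reduces payload size and keeps individual interaction traces off the wire.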

Step‑by‑step integration

- Define the minimal behavioral signal set for your model.

- Collect via the vendor SDK or a lightweight in‑house layer with aggregation.

- Run an A/B test: no signals vs. with signals.

- Assess impact on FP/FN and Core Web Vitals.

- Tune telemetry volume and frequency.

Practice 5: The role of mobile networks and proxies—what changes for defenders

Context: mobile networks with dense CGNAT, frequent IP churn, unstable latency, and battery‑saving device profiles create unique patterns. Detectors take this into account to avoid penalizing real users.

What to know

- Network anomalies: many users share a public IP—normal for mobile. IP reputation is less informative without context.

- Device signals: mobile OSs provide rich, privacy‑preserving integrity signals. Where private tokens exist, honest mobile clients pass with almost no friction.

- About proxies: mobile proxies are acceptable for testing and monitoring only with explicit consent and policy compliance. Masquerading as a “typical user” in production without permission invites escalation, blocks, and legal risk. Modern detectors spot inconsistent fingerprints and patterns—these schemes don’t scale.

Ethical use cases

- Cross‑network performance and availability tests from approved devices/proxies marked as test traffic.

- Diagnosing regional issues (e.g., a single carrier) in a sandbox.

- Validating UX across device classes without hiding from defenses.

Compliance checklist

- Proxy provider operates with explicit participant consent and transparent policies.

- Test runs stay within permissions, at reduced rates, and with clear identification.

- All sessions are logged and auditable.

Practice 6: Models, features, and an MLOps review of your anti‑bot stack

Why it’s critical: models drift. New browsers emerge, device patterns shift, traffic evolves. Without a regular MLOps cycle, accuracy degrades.

ML‑ANTIBOT framework

- Data: store features with versioned schemas, sources, and transforms. Ensure fair coverage by geo and device class.

- Labels: derive labels from confirmed outcomes—successful purchases, valid logins, flagged fraud. Avoid tainted labels.

- Features: use an ensemble of weak signals—network, cryptographic, subtle client and behavioral. Legacy single prints (e.g., Canvas alone) aren’t enough.

- Train: retrain on a rolling window; monitor drift and PSI (Population Stability Index).

- Serve: keep p95/p99 latency in check (target under 100 ms for a decision). Cache non‑sensitive outcomes.

- Explain: build interpretable reports—feature impact and where FP risk is concentrated.
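The PSI mentioned under Train compares how a feature (or the risk score itself) is distributed across bins in a baseline window versus a recent window: PSI = Σ (actual_share − expected_share) × ln(actual_share / expected_share). A minimal sketch, assuming pre‑binned counts and a small floor to avoid division by zero on empty bins:

```python
import math


def population_stability_index(expected_counts, actual_counts, floor=1e-6):
    """PSI between a baseline and a recent distribution over the same bins."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both windows must use the same bins")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    if expected_total == 0 or actual_total == 0:
        raise ValueError("each window needs at least one observation")
    psi = 0.0
    for expected, actual in zip(expected_counts, actual_counts):
        # Floor the shares so empty bins do not produce log(0).
        expected_share = max(expected / expected_total, floor)
        actual_share = max(actual / actual_total, floor)
        psi += (actual_share - expected_share) * math.log(actual_share / expected_share)
    return psi
```

A commonly quoted rule of thumb treats values below 0.1 as stable and values above 0.25 as a significant shift, but those cut‑offs are conventions rather than guarantees and should be validated against your own retraining history.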

90‑day review plan

- Audit features and privacy leakage.

- Recalibrate thresholds for seasonality.

- Compare vendor and in‑house models.

- Update incident playbooks and support runbooks.

Common mistakes: what not to do

- Running everything at max: aggressive rules without data crush conversion and flood support.

- Masquerading: randomizing User‑Agents, stealth proxies, and evading signals escalate defenses, trigger ASN blocks, and hurt your reputation.

- Ignoring official APIs: HTML scraping instead of supported interfaces leads to fragility and clashes with defenses.

- No incident plan: attack spikes are inevitable. Without playbooks, recovery drags on.

- Skipping accessibility tests: protections that conflict with assistive tech damage loyalty and risk non‑compliance.

- Storing excess data: extra personal fields raise privacy risk without boosting accuracy.

Tools and resources: what to use

Commercial solutions

- Cloudflare Bot Management and Turnstile for edge integration and lightweight challenges.

- HUMAN (which merged with PerimeterX in 2022) for e‑commerce and login protection with behavioral profiling.

- DataDome for a flexible balance of server/client defenses and fast deployment.

- Arkose Labs for stronger challenges when stakes are high.

Open and infrastructure stack

- Logging and tracing: OpenTelemetry; ClickHouse/BigQuery for event analytics.

- ML orchestration: a feature store plus MLflow or Kubeflow for versioning and monitoring models.

- Test runners: load‑test frameworks and traffic replay in a sandbox.

- Privacy frameworks: differential privacy, field anonymization, data minimization policies.

Org practices

- Incident playbooks: carding, credential stuffing, content scraping.

- Allowlist/verified‑bot procedures for service agents and partners.

- Quarterly joint reviews with vendors informed by business data.

Case studies and results: real‑world applications

Case 1: Marketplace checkout

Challenge: a surge in carding and more challenges at payment. Approach: HUMAN ran in silent mode for two weeks to collect risk distributions by device; added payment‑gateway signals (AVS/CVV outcomes); segmented rules by endpoint (login, cart, checkout). Result: carding down 72%, legit‑user challenge rate from 1.8% to 0.6%, payment conversion +1.1 pp, support tickets −28%.

Case 2: Media and content scraping

Challenge: mass unauthorized scraping and lower CPM due to abnormal traffic. Approach: DataDome at the edge; launched an official content API for partners with labeled keys and rate limits; degraded functionality for suspicious agents instead of hard blocks. Result: unauthorized scraping down 65%, “good” bots up to 22% of traffic, partner complaints nearly zero, CPM recovered by 15%.

Case 3: SaaS platform—enterprise FP issues

Challenge: customer corporate proxies produced unstable signals, triggering more challenges. Approach: Cloudflare Turnstile plus Private Access Tokens; allowlist by customer ASNs; SSO with stepped‑up auth for sensitive actions. Result: false challenges −40%, login p95 unchanged, NPS +6 points.

Case 4: Internal service agents

Challenge: monitoring robots occasionally got blocked after frontend releases. Approach: register agents as verified bots with dedicated keys, stable User‑Agent, and a control panel to observe their traffic. Result: 0 incidents for the quarter—predictable, SRE‑friendly operations.

FAQ: 10 in‑depth questions

1. Can we rely only on network signals and skip client telemetry?

Not if you want accuracy. Mobile networks, CGNAT, and IPv6 segmentation make IP reputation noisy. Balance the approach: lightweight client telemetry plus cryptographic tokens and context.

2. How do we minimize impact on Core Web Vitals?

Load SDKs asynchronously, use small bundles, cache decisions, and avoid render‑blocking. Keep added latency under 50–80 ms at p95; otherwise revisit signal collection.

3. Do we need our own anti‑bot on top of a vendor?

Often, custom rules on top of a vendor are enough: segmented thresholds, business context, allowlists. Build an in‑house ML model only at scale and when you have MLOps maturity.

4. How do we handle false positives?

Catalog FP cases, link them to conversions and support, run weekly reviews, and add segment‑level exceptions. Favor soft actions before blocks.

5. What if a partner uses nonstandard automation?

Move them to an official API, issue separate keys and limits, and define a policy profile. Insist on transparent identification—it helps everyone.

6. Do mobile proxies make traffic look like a real user?

No. Modern detectors check consistency across many signals and spot masking quickly. Use proxies only for approved tests in a sandbox.

7. How do we balance privacy and accuracy?

Use data minimization, aggregated behavioral metrics, private access tokens, and clear notices. Accuracy improves through context and feedback, not excess personal data.

8. Is CAPTCHA dead?

Classic CAPTCHAs are less effective and harm UX. They’re being replaced by invisible risk scoring, behavioral models, and cryptographic attestations. Stronger challenges still have a place—surgically.

9. How do we prepare for attack peaks?

Keep playbooks ready: temporarily tighten rules for specific endpoints, enable stronger challenges, and step up authentication. Prep communications for support and frontline teams.

10. How do we respond to model drift?

Monitor PSI and key metrics, retrain on a rolling window (at least quarterly), validate on fresh samples, and align thresholds with seasonality. Alert on abnormal FP/FN trends.

Conclusion: summary and next steps

AI bot detectors in 2026 blend network reputation, rich client telemetry, behavioral analytics, and cryptographic attestation. The Cloudflare Turnstile, HUMAN, and DataDome ecosystem is powerful, but it shines only when paired with your architecture and processes. Winners aren’t the harshest—they’re the smartest at managing risk: offering a legal, transparent path for real users and partners while making life harder for adversaries.

Your next steps: 1) map traffic and sensitive operations; 2) agree on verified‑bot policy and move integrations to APIs; 3) enable observe‑only mode and tune; 4) run ethical sandbox tests; 5) schedule 90‑day ML reviews; 6) refresh incident playbooks. Iterate and measure: fewer attacks, less friction, more trust. That’s the path to durable growth in a world where bots are half the Internet—and your business still needs to stay human, fast, and safe.