What is a URL Slug and How to Make It SEO-Friendly

Nowadays, a website address is more than a string of letters and numbers; it is an important part of promoting a site and building loyalty among its target audience. A well-made address helps people and search engines understand what a page is about without even visiting it. Imagine searching for an article about online marketing and seeing a link like https://example.com/?p=12345, a jumble of letters, symbols, and numbers that tells you nothing. Would you want to click it? Most likely not, because you can't tell what you'll find on the page. Next to it, however, you see another URL: https://example.com/blog/kak-uluchshit-sayt-dlya-poiska (transliterated Russian for "how to improve a site for search"). A quick glance at the address is enough to understand what the page is about, and you are far more likely to click it.

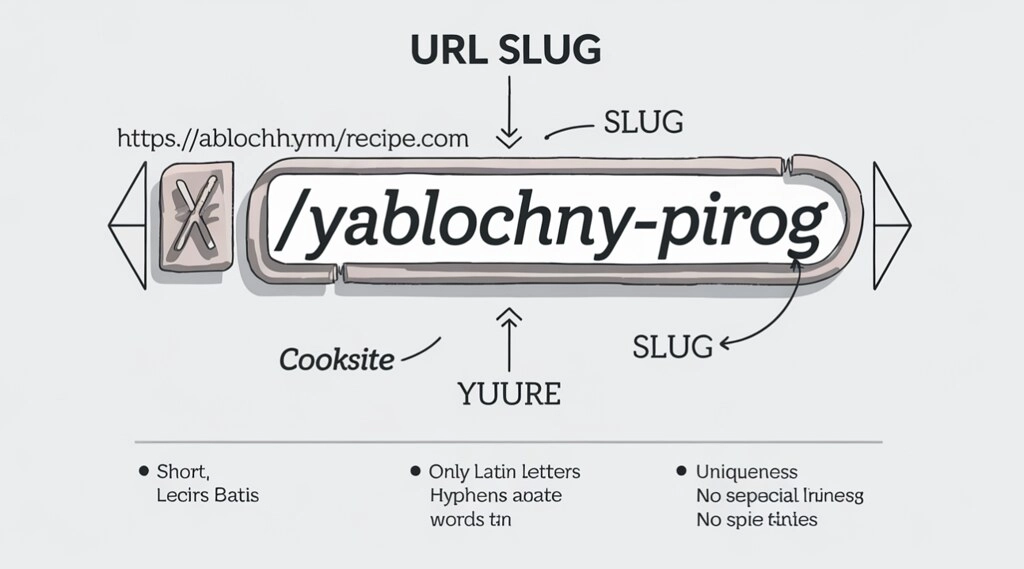

This example shows what a URL slug is: a short part of a page's address that can make your site more visible in search results and set it apart from similar sites. It is the phrase that appears after the last "/" character in the address. It indicates what the page is about and makes the link easier to understand for both visitors and search bots. A slug typically consists of simple lowercase words separated by hyphens, with no unnecessary characters.
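The description above (lowercase words, single hyphens, nothing else) can be checked with one regular expression. Below is a minimal Python sketch; the function name `is_clean_slug` and the decision to allow digits are our own assumptions, not a formal standard.

```python
import re

# Assumption: a "clean" slug is one or more runs of lowercase Latin letters
# or digits, joined by single hyphens, with no leading or trailing hyphen.
CLEAN_SLUG = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def is_clean_slug(slug: str) -> bool:
    """Return True only if the whole string matches the clean-slug pattern."""
    return CLEAN_SLUG.fullmatch(slug) is not None


if __name__ == "__main__":
    for candidate in ["kak-uluchshit-sayt-dlya-poiska", "Winter_Boots", "отдых-в-сочи"]:
        print(candidate, is_clean_slug(candidate))
```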

Why is this important? First, a descriptive slug helps search engines such as Google and Yandex understand the page and can help it rank higher when the words in the slug match people's queries. Second, it is convenient and informative for visitors: the link is easy to read and remember and tends to get clicked more often. Figures of a 20-30% rise in conversions are sometimes quoted, though the real effect varies from site to site. Third, a well-made slug simplifies site management, avoids confusion between similar pages, and helps search engines find them faster.

That is why it matters to make each slug as accurate and informative as possible. It should not contain Cyrillic letters or unnecessary words, as these can make your page less visible in search results. To avoid this, you need to understand the topic well, and that is what this article is about. First, we'll look at what a URL slug is and what role it plays in SEO.

Next, we'll review the tools that help identify errors in URL slugs, highlight the issues people run into most often when writing slugs, and give practical recommendations for optimizing your page URLs. This will help you create high-quality slugs that work for both regular users and search engine bots.

We also recommend studying the material "Dynamic URL: What is it and how to use it".

What is a URL Slug?

A simple, real-life example will help you understand what a URL slug is. Imagine you're searching for an apple pie recipe online. A good URL for such a page might look like this: https://mysite.com/recipes/kak-sdelat-vkusnyy-yablochnyy-pierog. Here, the slug is the entire portion of the URL that comes after the last "/", i.e., "kak-sdelat-vkusnyy-yablochnyy-pierog" (transliterated Russian for "how to make a delicious apple pie"). It briefly describes what the page is about and tells you immediately what awaits you there.

This raises a natural question: why isn't the slug the whole "recipes/kak-sdelat-vkusnyy-yablochnyy-pierog"? Because "recipes" is simply a section of the site, perhaps a folder with recipes or a menu category. The URL slug is the label for one specific page within that section. It makes the address clear and convenient: people can immediately see what the material is about, and search engines quickly understand the topic and show the page to the right users.
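The split between section and slug is easy to see in code. Here is a small Python sketch using only the standard library; the function name `split_section_and_slug` is our own, and it simply treats the last non-empty path segment as the slug, as described above (real CMSs may store slugs differently).

```python
from urllib.parse import urlsplit


def split_section_and_slug(url: str) -> tuple[str, str]:
    """Split a URL path into its section part and the final slug.

    The slug is taken to be the last non-empty path segment, so a trailing
    slash ("/recipes/apple-pie/") does not produce an empty slug.
    """
    path = urlsplit(url).path.strip("/")
    if not path:
        return "", ""  # e.g. the home page or "/?p=12345" has no slug
    section, _, slug = path.rpartition("/")
    return section, slug


if __name__ == "__main__":
    print(split_section_and_slug(
        "https://mysite.com/recipes/kak-sdelat-vkusnyy-yablochnyy-pierog"))
```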

Another example: if you have an online clothing store, a good product URL might be: https://shop.com/odezhda/letnyaya-futbolka-dlya-muzhchin. In this case, the slug is "letnyaya-futbolka-dlya-muzhchin," a phrase that clearly describes the product—a summer t-shirt for men. This approach not only simplifies search but also increases trust with the audience and search bots: the link looks neat, without unnecessary numbers or characters. This means people are more likely to click it. As a result, the site becomes more user-friendly for everyone, and pages are more likely to appear at the top of search results.

What role does URL slug play in SEO promotion?

When you see a link like https://site.com/page?id=98765, it looks strange and unclear: what kind of page is it, and can it be trusted? People often don't click such URLs because they find them suspicious. Search engines don't favor them either, since the address itself gives no hint about the page's content.

It's much better if the URL looks like this: https://site.com/blog/kak-vybrat-velosiped-dlya-goroda. It's immediately clear that the page belongs to the site's blog section and is about choosing a bicycle for the city. This kind of URL helps both people and search engines quickly understand what's inside, which makes the page easier to find and can help it rank higher.

Why does this work in practice? There are several aspects worth considering:

- A clear and logical structure. When a site's addresses are simple and consistent, search engines can crawl and interpret its pages more easily. For example, in https://shop.com/catalog/zimnie-botinki it's immediately clear that this is the winter boots page in the store's catalog.

- Keywords in the address. If the slug includes important words, such as "smartphone-repair," search engines understand that the page is optimally suited for the query "smartphone repair." This helps it rank highly for relevant topics.

- Usefulness for people. A readable address inspires trust, is easy to remember, and is easy to forward to a friend. People tend to click such links more readily, which brings the site more visitors and can support its search rankings.

We hope you now understand the importance of using a correct URL slug on your website pages and know that your site will only benefit from it: it will become more visible and convenient for both real users and search engine bots.

What tasks should you use a URL slug for?

A URL slug is more than just a fragment of a website address; it's a true assistant for your website and its visitors. Here are just a few clear examples of its importance and the tasks it can solve:

- It uniquely identifies each page. A slug works like a name for the page. For example, on a travel website, the address https://travelblog.com/italy/rome-dostoprimechatelnosti immediately identifies the page as one about Rome's attractions. This helps avoid confusion between similar pages and makes it quick to find the information you need.

- It helps your site rank higher than competitors in search results. If the slug includes relevant keywords, such as "remont-noutbuka" for a page about laptop repair, search engines understand that the page is relevant for that query. This increases the chances that your site will be seen by more people.

- It makes your site more user-friendly. Short, clear URLs are easier for users to remember and find again. The address https://shop.com/odezhda/zimnyaya-kurtka is much easier to understand and remember than a long link with a jumble of letters, numbers, and symbols.

- Reduces technical errors. Simple slugs without unnecessary characters and spaces are less likely to cause problems for search robots. This means pages are more quickly included in search results and are not lost due to errors.

- Adds context and meaning. A URL slug hints at what the page is about, even if you haven't opened it yet. For example, the address https://recipes.com/vypechka/shokoladnyy-tort immediately tells us that when you visit this page, you'll see a chocolate cake recipe. Search engines will also use this name to better understand the site's content and display it in response to relevant user queries.

This means that a slug makes your site more convenient for bots and humans, helping you attract more visitors and steadily move up the search results.

Getting to Know the Tools for Detecting URL Slug Errors

Today, the IT market offers a wide range of tools that can help you identify URL slug errors. Specifically, these include:

- Google Search Console.

- Netpeak Spider.

- Screaming Frog SEO Spider.

- Ahrefs.

Let's take a closer look at each of these products now.

Google Search Console for Checking URL Slugs

Google Search Console is a free yet very useful service from Google that helps website owners monitor how their pages appear in search results. This tool is especially useful if you want to ensure your URLs and their slugs are as clear, user-friendly, and easily indexed by bots as possible.

When you add your site to Google Search Console, the service shows which pages are already indexed and which are not yet visible in search. The "Pages" (page indexing) report explains why some URLs aren't appearing in search results: these could be 404 errors, redirect problems, duplicates, or other technical issues with the URL itself. Search Console does not grade slug style directly, but it does reveal the consequences of problematic addresses, for example pages with extra characters, spaces, Cyrillic, or overly long slugs that fail to get indexed. Once you spot such pages, you can fix them before search engines misread them or stop showing them to people.

Let's say you have a recipe website with a page at https://cooksite.com/recipes/letniy-salat-s-tomatami-i-kuritzey-2025. If that page is poorly indexed or gets few impressions, Search Console will show it, and you may decide to replace the slug with a shorter, clearer one (for example, "letniy-salat"), redirecting the old address to the new one. Don't forget to check how the change affects the page's visibility afterward.

The Performance report also shows which search queries each page receives impressions and clicks for, so you can compare those queries with the words in your slugs. For example, if a page's slug is "repair-smartphone" and people search for "smartphone repair," Search Console will show how many impressions and clicks the page gets for that query. This helps you judge whether a slug matches what users are actually looking for.

Search Console also reports duplicate URLs in its page indexing report (for example, with the status "Duplicate without user-selected canonical"). If you have multiple pages with near-identical slugs and content, such as "recept-salata" and "recept-salata-2025," search engines may have to guess which one to show, which can hurt your rankings. You can consolidate such pages, set up proper redirects, and make your site structure easier to understand.
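Search Console only reports duplicates it has already encountered. To spot suspicious slug pairs in your own URL list beforehand, one rough heuristic is to strip trailing years, numbers, and words like "new" or "copy" and group whatever remains. This is a hypothetical Python sketch, not a Search Console feature; it produces false positives (it would group "iphone-15" with "iphone-16"), so treat its output as a list to review by hand.

```python
import re
from collections import defaultdict

# Hypothetical heuristic: trailing number tokens and words such as "new" or
# "copy" often mark an accidental copy of an existing page.
NOISE_TOKEN = re.compile(r"\d+|new|copy")


def slug_stem(slug: str) -> str:
    """Drop trailing noise tokens ("-2025", "-new", "-2") from a slug."""
    tokens = slug.split("-")
    while len(tokens) > 1 and NOISE_TOKEN.fullmatch(tokens[-1]):
        tokens.pop()
    return "-".join(tokens)


def find_similar_slugs(slugs: list[str]) -> list[list[str]]:
    """Group slugs sharing a stem; only groups with 2+ members are returned."""
    groups: dict[str, list[str]] = defaultdict(list)
    for slug in slugs:
        groups[slug_stem(slug)].append(slug)
    return [group for group in groups.values() if len(group) > 1]


if __name__ == "__main__":
    print(find_similar_slugs(["recept-salata", "recept-salata-2025", "shokoladnyy-tort"]))
```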

Google Search Console also shows how often users click on your links in search results (CTR) and which pages receive the most traffic. Experience shows that if a page slug is simple and contains the right words, people are more likely to choose your site. This helps you understand which URLs perform best and then optimize other URLs based on this information.
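For reference, Search Console computes CTR as clicks divided by impressions. With illustrative numbers (not real data):

$$\mathrm{CTR} = \frac{\text{clicks}}{\text{impressions}} \times 100\%, \qquad \text{e.g.}\ \frac{30}{1000} \times 100\% = 3\%$$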

In short, Google Search Console is an indispensable tool for anyone who wants to make their URLs and slugs as user-friendly as possible for both humans and search engines. It helps you find and fix errors quickly, improve your site structure, and raise your search rankings.

Netpeak Spider for URL Slug and Site Structure Optimization

Netpeak Spider is a modern tool for comprehensive SEO audits of websites. It is especially useful when you need to make your URLs and slugs as clear and user-friendly as possible for search engines and users. It helps you quickly find and fix errors that keep your site from ranking high in search results and attracting a steady flow of visitors.

When you run Netpeak Spider, the program crawls all pages of your site and displays a detailed report for each address. It shows which links are broken, where the URL structure has problems, and which pages are duplicates, so you can quickly identify anything that could confuse search engines and lower your site's ranking. This is especially important for slugs: extra characters, spaces, Cyrillic, or overly long phrases all hurt your site's promotion. Netpeak Spider highlights such errors in its report and describes what needs to be fixed.

For example, if you have a website about appliance and electronics repair, and the page address is https://remontsite.com/uslugi/remont-smartdona, Netpeak Spider will check the slug for extra characters, double hyphens, or capital letters. If an error is found, the program will show it in the report, and you can quickly change the name to the correct one, i.e., "smartphone-repair." This will help search engines correctly understand your page and improve its rankings.

Another convenient feature is that Netpeak Spider also helps identify technical issues that prevent page indexing. For example, if a slug contains special characters like %, &, +, or spaces, the program will show that such addresses are poorly interpreted by search robots and can lead to 404 errors or overall traffic loss. You can quickly fix any identified issues, thereby ensuring high availability of your pages for search engines and visitors.

Another useful feature of this tool is the analysis of duplicate URLs (slugs). If you have multiple pages with similar URLs, Netpeak Spider will warn you that this could be leading to link fragmentation and a drop in your site's rankings. It might be worth consolidating these pages, setting up proper redirects, or making your site structure more logical and user-friendly.

Netpeak Spider also lets you track how errors change after making edits. You can rerun the scan and ensure that all slugs are clean, short, and contain the right keywords. This is especially important for large sites where manually checking every page is physically impossible.

If you want to make your URLs and slugs as user-friendly as possible for both humans and search engines, consider Netpeak Spider. It makes finding and fixing existing errors quick and easy, which improves your site structure and can boost your search rankings, and with them your traffic and customer base.

Screaming Frog SEO Spider for URL Slug Analysis and Improvement

Screaming Frog SEO Spider is a powerful desktop program that lets website owners and SEO specialists quickly find and correct errors in page addresses, including slugs. It is especially useful for those who want to make their website structure clearer and all URLs clean and easy to find.

When you run Screaming Frog, the program crawls the entire site and collects detailed information on each page. The report shows URL length, redirects, server response codes (e.g., 200 when everything is OK, or 404 when the page is not found), and duplicate addresses and content. This matters for SEO as a whole: long, confusing, or duplicate URLs can hold back a site's rankings, and so can broken pages and faulty redirects.

For URL slugs, Screaming Frog is especially useful because it lets you quickly find pages with problematic addresses. For example, if you have an online electronics store with a product URL like https://shoptech.com/products/12345?ref=abc, the crawl will list it among URLs with parameters, and a purely numeric slug like this tells visitors nothing. Screaming Frog can also flag URLs that are too long or that contain special characters, spaces, uppercase letters, underscores, or non-ASCII characters such as Cyrillic. You can filter out such pages and change their URLs to simpler, clearer ones, for example, https://shoptech.com/products/besprovodnye-naushniki.

Another important feature of this service is redirect analysis. Screaming Frog will show if a page with an incorrect slug redirects to another one and help ensure that all redirects are configured correctly. This is especially important when performing a large-scale site structure change or URL slug optimization: you can avoid traffic loss and indexing errors.

The program also helps identify duplicate URLs and content. For example, if two pages with similar slugs, such as https://shoptech.com/products/smartfon-2025 and https://shoptech.com/products/smartfon-2025-new, serve the same or nearly the same content, Screaming Frog will flag them, since such pages can confuse search engines and dilute link equity. You can then merge them, set up canonical tags, or add redirects to make the site structure more logical and user-friendly.

Screaming Frog allows you to export all detected errors to a spreadsheet so you can address them step by step. This is especially useful for large sites with hundreds or thousands of pages, where manually checking each slug is impossible. The program also integrates with Google Analytics and Google Search Console, allowing you to track how URL slug changes impact your site's traffic and rankings.
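Crawlers run these checks at scale, but the idea behind them is simple. Here is a minimal Python sketch that checks a list of URLs for the slug problems discussed in this article and writes the offenders to CSV, assuming you already have the URLs (for example, from a sitemap). The issue names and the 115-character threshold are our own illustrative choices, not the exact rules of Screaming Frog or Netpeak Spider.

```python
import csv
import io
import re
from urllib.parse import urlsplit

MAX_URL_LENGTH = 115  # assumed threshold for "too long"; adjust for your site
PERCENT_ESCAPE = re.compile(r"%[0-9A-Fa-f]{2}")
# Anything except word characters, "/", ".", "-", "%" or whitespace;
# "%" and whitespace are reported by their own checks below.
SPECIAL_CHAR = re.compile(r"[^\w/.\-%\s]")


def url_issues(url: str) -> list[str]:
    """List the slug problems discussed in this article for a single URL."""
    parts = urlsplit(url)
    path = parts.path
    stray_hyphens = (
        "--" in path or "/-" in path or "-/" in path or path.endswith("-")
    )
    checks = [
        ("uppercase", any(ch.isupper() for ch in path)),
        ("underscore", "_" in path),
        ("space", " " in path or "%20" in path),
        ("non-ascii", not path.isascii()),
        ("percent-encoded", PERCENT_ESCAPE.search(path) is not None),
        ("special characters", SPECIAL_CHAR.search(path) is not None),
        ("stray hyphens", stray_hyphens),
        ("parameters", bool(parts.query)),
        ("too long", len(url) > MAX_URL_LENGTH),
    ]
    return [name for name, failed in checks if failed]


def export_report(urls: list[str]) -> str:
    """Return CSV text with one row per URL that has at least one issue."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["url", "issues"])
    for url in urls:
        issues = url_issues(url)
        if issues:
            writer.writerow([url, "; ".join(issues)])
    return buffer.getvalue()


if __name__ == "__main__":
    print(export_report([
        "https://shoptech.com/products/besprovodnye-naushniki",
        "https://shoponline.com/Products/New-Arrivals",
        "https://cooksite.com/recipes/%best+salad{}",
    ]))
```

Writing the report to a file instead of a string only requires passing an open file object to `csv.writer`.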

This means that Screaming Frog SEO Spider is an indispensable tool for those who want to make their URLs and slugs as user-friendly as possible for both humans and search engines. It helps you quickly find and fix errors, improve your site's structure, and boost your search rankings. Ultimately, this will positively impact traffic growth and customer acquisition.

Ahrefs for URL Slug Analysis and Website SEO

Ahrefs is one of the most popular and powerful SEO tools available today. It helps website owners and marketers analyze external and internal links, as well as the health of their URLs. Among other things, it can also be used to make your URLs and slugs more understandable and effective for search engines and users.

With Ahrefs, you can find out which pages on your site have the most backlinks. This is a truly important indicator of authority and popularity. The more high-quality links pointing to a page, the higher its chances of ranking well in search results. For example, if you have a travel website and a page with the address https://travelblog.com/italy-rome has many links from other resources, search engines will consider it more important and show it more often in response to a related user query.

Furthermore, Ahrefs, like the other services mentioned above, helps identify broken links and redirects to non-existent pages. This is important because such errors negatively impact user experience and lower your site's ranking. If your electronics store has a link to a product that no longer exists, Ahrefs will highlight this issue, allowing you to quickly fix it by redirecting users to the current page.

Ahrefs can also identify duplicate content and technical issues that may be hindering your site's ranking. For example, if you have multiple pages with similar URLs, the service will highlight this and suggest adjustments. You can set up canonical tags or redirects to avoid traffic dilution and improve SEO. Another important feature of Ahrefs is its analysis of keywords found in URLs and page content. This information will allow you to optimize your remaining URLs and improve your site's visibility.

Use this service if you want to make your URLs and slugs as user-friendly and effective as possible for both humans and search engines. It allows you to quickly find and fix errors, improve your site structure, and improve your search rankings. This ensures effective promotion of sites to the top of search results.

Most Common Errors in URL Slug Generation

Now we'll take a closer look at the errors most often detected in practice by the above-mentioned services when analyzing URL slugs.

Encoded URLs

When creating page addresses, situations often arise where slugs contain characters that are not intended for direct use on the internet. These can include spaces, Cyrillic characters, or special characters. For example, if you run a travel blog and create a page with the address https://travelblog.com/отдых-в-сочи, the Cyrillic in the URL slug will be automatically encoded by the browser or server, and the address will become https://travelblog.com/%D0%BE%D1%82%D0%B4%D1%8B%D1%85-%D0%B2-%D1%81%D0%BE%D1%87%D0%B8. This type of link looks complex, is hard to read, and can discourage visitors.

Furthermore, encoded characters like %20 (a space), %D1%82 (a Cyrillic letter), or other special characters make the URL longer and more difficult to understand. Users won't be able to understand the page before visiting it, and search engines may have difficulty indexing it. Sometimes servers don't process such requests correctly, leading to 404 errors or broken links, which directly impacts traffic and website rankings. Often, such errors occur accidentally: someone copies a page title with Cyrillic characters or leaves spaces between words.
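You can reproduce this encoding with Python's standard library. `urllib.parse.quote` percent-encodes each character's UTF-8 bytes, which is how the Cyrillic slug from the travel-blog example turns into the long address shown earlier; hyphens are "unreserved" characters and stay as they are.

```python
from urllib.parse import quote, unquote

# The Cyrillic slug from the travel-blog example.
cyrillic_slug = "отдых-в-сочи"

# Each Cyrillic letter becomes two UTF-8 bytes, i.e. two %XX escapes.
encoded = quote(cyrillic_slug)
print(encoded)  # %D0%BE%D1%82%D0%B4%D1%8B%D1%85-%D0%B2-%D1%81%D0%BE%D1%87%D0%B8

# A space becomes %20.
print(quote("summer salad"))  # summer%20salad

# unquote() reverses the process.
print(unquote(encoded))  # отдых-в-сочи
```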

To avoid such problems, follow these guidelines:

- Always use Latin letters and hyphens to separate words in a slug;

- Avoid spaces, underscores, special characters, or Cyrillic characters;

- Check page URLs using the services mentioned above and correct any errors as quickly as possible;

- Make sure your slug is short, clear, and reflects the essence of the page.
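When a CMS builds slugs from page titles, these guidelines can be automated. Below is a minimal Python sketch that assumes Russian titles and uses a simplified transliteration table of our own (not an official scheme such as ISO 9); the six-word cap is likewise an arbitrary choice. With this table, the title "Летняя футболка для мужчин" produces the slug from the clothing-store example.

```python
import re

# Hypothetical, simplified Russian-to-Latin table that mirrors the style of
# the slugs in this article; real projects should agree on one scheme.
TRANSLIT = {
    "а": "a", "б": "b", "в": "v", "г": "g", "д": "d", "е": "e", "ё": "e",
    "ж": "zh", "з": "z", "и": "i", "й": "y", "к": "k", "л": "l", "м": "m",
    "н": "n", "о": "o", "п": "p", "р": "r", "с": "s", "т": "t", "у": "u",
    "ф": "f", "х": "kh", "ц": "ts", "ч": "ch", "ш": "sh", "щ": "shch",
    "ъ": "", "ы": "y", "ь": "", "э": "e", "ю": "yu", "я": "ya",
}


def slugify(title: str, max_words: int = 6) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated slug."""
    text = "".join(TRANSLIT.get(ch, ch) for ch in title.lower())
    # Any run of characters other than a-z and 0-9 becomes a single hyphen,
    # which also prevents double hyphens and hyphens at the edges.
    text = re.sub(r"[^a-z0-9]+", "-", text).strip("-")
    return "-".join(text.split("-")[:max_words])


if __name__ == "__main__":
    print(slugify("Летняя футболка для мужчин"))
    print(slugify("The Best Exercises for Home!"))
```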

If you identify and correct such issues promptly, your site will look professional to both bots and regular users, and its pages will quickly rank high in search results.

URLs with extra special characters

When creating page addresses, sometimes extra characters accidentally appear that are unnecessary for both users and search engines. These can include the %, &, +, {} symbols, and other elements. For example, if you have a cooking website and the recipe address looks like https://cooksite.com/recipes/%best+salad{}, such a URL immediately raises questions: what are these characters and can you trust this page?

Such errors often occur when someone copies a title with extra characters or uses automatic address generation. It's also important to understand that search engines prefer hyphens to separate words, and extra characters can confuse robots and lead to indexing errors.

To make URLs clean and easy to use, follow these guidelines:

- Use only Latin letters and hyphens to separate words;

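- Keep the slug itself to Latin letters, digits, and hyphens. Characters such as / ? = & # have a legitimate role elsewhere in a URL (/ separates path segments, ? starts the query string, = and & separate parameters, # marks a fragment, and + is often read as a space in query strings), which is exactly why they should not appear inside a slug;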

- Avoid underscores, replacing them with hyphens (-) to ensure search engines separate words correctly;

- After making adjustments, be sure to set up 301 redirects from old URLs to new ones to avoid losing traffic and maintain your site's rankings;

- Update internal links to the new addresses and, where possible, ask sites that link to you to update theirs, to avoid broken URLs and lost visitors.
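Why keep these characters out of the slug? Each already has a job in a URL, so a parser will split the address where they appear. The snippet below runs Python's standard `urllib.parse` on made-up addresses to show this.

```python
from urllib.parse import parse_qs, urlsplit

# "?" starts the query string, so the slug ends where "?" appears.
parts = urlsplit("https://cooksite.com/recipes/best-salad?ever")
print(parts.path)   # /recipes/best-salad
print(parts.query)  # ever

# "#" starts the fragment, which browsers do not send to the server at all.
print(urlsplit("https://cooksite.com/recipes/salad#1").path)  # /recipes/salad

# In form-encoded query strings, "+" is decoded as a space.
print(parse_qs("q=best+salad"))  # {'q': ['best salad']}
```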

If you identify and correct such errors promptly, your site will look professional, and your pages will rank faster in search results and attract more people.

URLs with double hyphens

Sometimes, when creating page addresses on a site, extra hyphens appear, either immediately after a slash, at the end of the address, or even two hyphens in a row. This often happens when the system automatically converts a page or product name into a slug, replacing all spaces and punctuation with hyphens without additional verification. For example, if you have a website about sports and add an article titled "The Best Exercises for Home!", the address might become https://sportblog.com/articles/-luchshie-uprazhneniya--dlya-doma. This shows both a hyphen after the slash and a double hyphen in the middle, which is fundamentally incorrect.

Such errors don't always directly affect a site's search rankings, but they make page addresses less appealing and difficult to understand. A user seeing such a link may be wary, which automatically reduces trust in the site. Furthermore, long and complicated URLs are harder to remember and forward to a friend. Such problems often arise on sites with a large number of products or articles, where the slug is generated automatically.

What can you do to avoid such mistakes? Here are a few simple recommendations.

- Check your URL structure and remove unnecessary hyphens, especially at the beginning, end, and between words;

- Use only one hyphen to separate words to keep the URL slug short and readable;

- After making changes, be sure to set up a 301 redirect from the old address to the new one to avoid losing traffic and maintain your site's ranking;

- Update internal links and, where possible, external ones so that users and search engines always land on the current page.
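The cleanup and the redirect step can be combined. Here is a minimal Python sketch, assuming you have a list of existing paths (for example, from a crawl export). It fixes stray hyphens in each path segment and returns an old-to-new mapping; the function names are hypothetical, and you would still need to enter the mapping as 301 rules in your web server or CMS, whose syntax varies.

```python
import re


def fix_hyphens(path: str) -> str:
    """Collapse repeated hyphens and trim hyphens at the edges of each segment."""
    segments = path.split("/")
    cleaned = [re.sub(r"-{2,}", "-", segment).strip("-") for segment in segments]
    return "/".join(cleaned)


def build_redirect_map(paths: list[str]) -> dict[str, str]:
    """Map every path that changes to its cleaned version (candidates for 301s)."""
    redirects = {}
    for old in paths:
        new = fix_hyphens(old)
        if new != old:
            redirects[old] = new
    return redirects


if __name__ == "__main__":
    old_paths = ["/articles/-luchshie-uprazhneniya--dlya-doma", "/articles/plank"]
    for old, new in build_redirect_map(old_paths).items():
        print(f"301 {old} -> {new}")
```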

Correcting such minor errors promptly will ensure your pages rank better in search results, which will help attract more visitors and, ultimately, promote your site effectively.

URLs with Capital Letters

Sometimes, capital letters are used when creating page addresses on a website, for example: https://shoponline.com/Products/New-Arrivals. At first glance, this might not seem like a problem—the address looks neat and legible. However, search engines consider addresses like https://shoponline.com/products/new-arrivals and https://shoponline.com/Products/New-Arrivals to be different pages. This can lead to duplicate content, where the same page is accessible via multiple URLs that differ only in case.

This situation is dangerous for SEO: search engines may become confused about which address to prioritize, and some link juice and traffic will be dispersed between the duplicates. As a result, the site's search ranking may decline, and users will be directed to different versions of the same page, making navigation difficult. As with other errors, this problem is especially relevant for large websites where URLs are generated automatically or edited by different employees.

Practical tips in this case include the following:

- Always use lowercase letters in every part of the URL after the domain name (the domain itself is case-insensitive);

- Set up 301 redirects from any URL containing uppercase letters to its lowercase version so all traffic and link equity go to the correct page;

- Check your website structure and update internal links (and, where possible, external ones) so users and search engines always reach the current version of the page.
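If your site runs on a Python web stack, the redirect rule can live in a small piece of WSGI middleware. This is a minimal sketch, assuming the application is mounted at the site root and all real URLs are lowercase; the name `lowercase_redirect` is ours. It lowercases only A-Z because WSGI's `PATH_INFO` holds raw bytes decoded as Latin-1, and lowercasing other characters could corrupt multi-byte UTF-8 sequences.

```python
import string
from urllib.parse import quote

# Translation table that lowercases only the ASCII letters A-Z.
ASCII_LOWER = str.maketrans(string.ascii_uppercase, string.ascii_lowercase)


def lowercase_redirect(app):
    """WSGI middleware that 301-redirects paths containing A-Z to lowercase."""

    def middleware(environ, start_response):
        path = environ.get("PATH_INFO", "")
        lowered = path.translate(ASCII_LOWER)
        if lowered == path:
            return app(environ, start_response)  # already lowercase: pass through
        # Re-encode the Latin-1 "bytes" string and percent-escape it for the header.
        location = quote(lowered.encode("latin-1"))
        query = environ.get("QUERY_STRING", "")
        if query:
            location += "?" + query
        start_response("301 Moved Permanently", [("Location", location)])
        return [b""]

    return middleware


# Usage: application = lowercase_redirect(application)
```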

Following these recommendations will make your website more search-friendly, simplify its indexing, and help avoid technical issues with duplicates.

Duplicate URLs

Of all the error types, duplicate page addresses are among the most common. For example, in an online shoe store, the same product, "black sneakers," might be available at several addresses: https://shoeshop.com/catalog/black-sneakers, https://shoeshop.com/sale/black-sneakers, and https://shoeshop.com/black-sneakers. The product is the same everywhere, but the addresses differ, and search engines treat them as three separate pages even though the content is identical.

This confuses search bots: they can't tell which address is the primary one. As a result, the version that appears in search results may not be the one you want. Link equity is also split between the copies, so no single page gets the full benefit. This can lower the site's rankings and cost you traffic and customers. Duplicates most often appear because of CMS features, filters, product sorting, UTM parameters, or configuration errors.

To avoid duplicates, follow these tips:

- Define a canonical URL for each page;

- Add a canonical tag (rel="canonical") to all duplicate pages so search engines know which URL is the primary one;

- Where a duplicate doesn't need to exist as a separate page, set up a 301 redirect from it to the primary URL so all traffic and link equity go where they should;

- Check and update internal links on your website so they only point to canonical pages: this will help avoid confusion for users and search engines.
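Part of this can be automated. The Python sketch below reduces a URL to one canonical form and builds the matching `<link rel="canonical">` element. Its rules are assumptions for illustration: it lowercases the path, drops `utm_*` parameters and fragments, and uses a hand-maintained, hypothetical `PRIMARY_PATH` table for duplicates that only a person can resolve, such as the three sneaker addresses above.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical editorial decision: which path is primary for known duplicates.
PRIMARY_PATH = {
    "/sale/black-sneakers": "/catalog/black-sneakers",
    "/black-sneakers": "/catalog/black-sneakers",
}


def canonical_url(url: str) -> str:
    """Reduce a URL to one canonical form under the assumed rules above."""
    parts = urlsplit(url)
    path = parts.path.lower() or "/"
    path = PRIMARY_PATH.get(path, path)
    # Drop UTM tracking parameters; keep other parameters in their original order.
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.lower().startswith("utm_")
    ]
    # The fragment ("#...") is never sent to the server, so it is dropped.
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), "")
    )


def canonical_link_tag(url: str) -> str:
    """Build the <link rel="canonical"> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'


if __name__ == "__main__":
    print(canonical_link_tag("https://shoeshop.com/sale/black-sneakers?utm_source=mail"))
```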

By identifying and eliminating duplicates promptly, your site will be better indexed, its pages will rank higher, and users will always find the information they need.

Summing Up

Optimizing URLs and slugs is an essential step for any site that wants to be visible in search results and easy to use. Clean, short, and clear URLs help search engines index your site faster and help visitors navigate its structure. Using keywords in your slug makes the page more relevant and increases its chances of ranking well for matching queries.

It's important to avoid the typical mistakes discussed above: unnecessary characters, spaces, Cyrillic characters, capital letters, and duplicate URLs. Each slug on your site should be unique and accurately reflect the essence of the page. Use only lowercase Latin letters, digits, and hyphens. Don't make URLs too long or overload them with dates and details; concise, informative slugs work best.

For large websites, it's especially important to establish a proper URL structure from the start to avoid indexing issues and traffic loss. If you have to change URLs on an existing site, be sure to set up 301 redirects to maintain rankings and avoid losing visitors.

Remember that URL optimization isn't the only factor for success, but it noticeably affects SEO and how people perceive your site. Follow these simple recommendations, use error-checking tools, and audit your URL structure regularly. This will help your site rise in search results and keep users coming back again and again.

We also want to draw your attention to a tool like mobile proxies from MobileProxy.Space. With it, your website promotion efforts will become as efficient, flexible, convenient, and functional as possible. You'll be able to easily track current trends in your market niche worldwide, while bypassing regional access restrictions. You'll also be able to use automated solutions for parsing data from competitors' websites, launch multiple accounts on social media, and more. More information about the functionality of these mobile proxies can be found in the "Articles" section.

You can also test this product free of charge for two hours to verify its advantages for your business. After that, our 24/7 technical support specialists will be at your service. More information can be found here.